Aloys Build Manual

Aloys's Environment Setup Manual

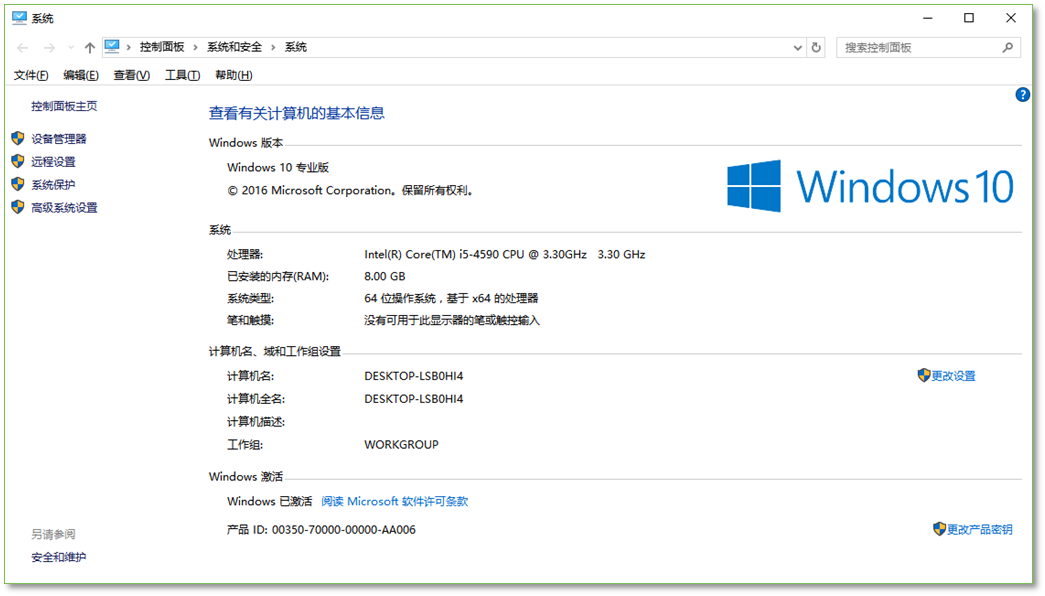

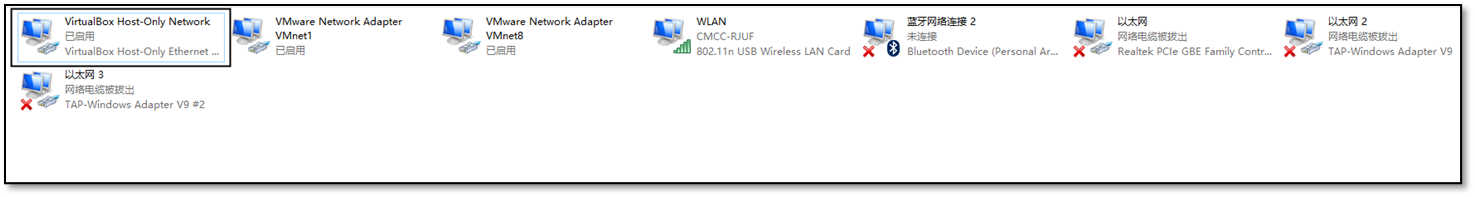

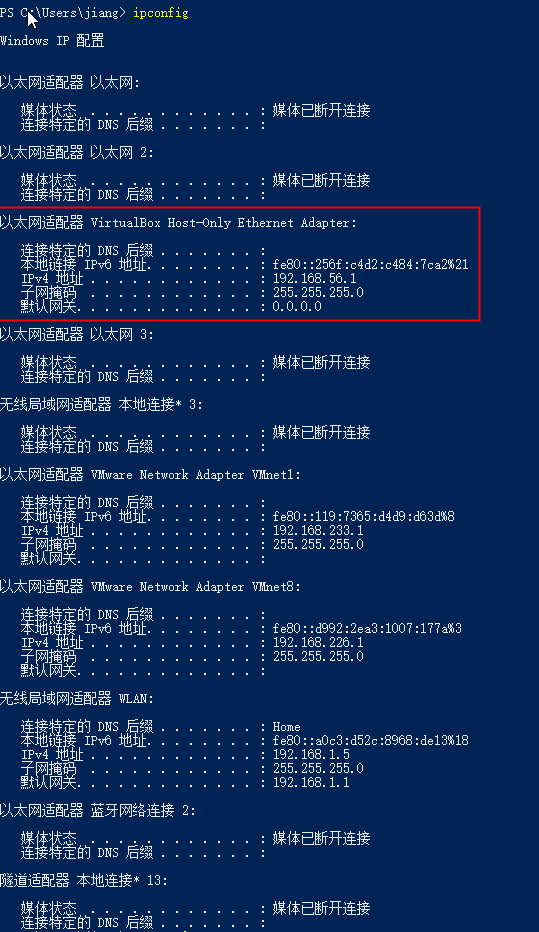

Windows host information:

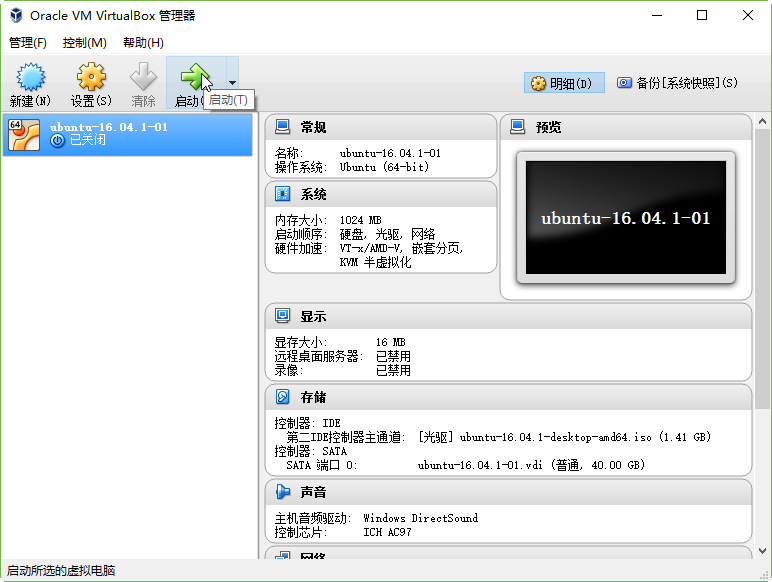

Planned cluster: using VirtualBox, install three virtual machines running Ubuntu 16.04.1. One of them acts as NameNode, SecondaryNameNode and ResourceManager; the other two act as DataNode and NodeManager. The network layout is as follows:

| Hostname | IP | Netmask | Gateway | DNS server | Role |
|---|---|---|---|---|---|
| DESKTOP-LSB0HI4 | 192.168.1.100 | 255.255.255.0 | 192.168.1.1 | 114.114.114.114 | Host |
| Ubuntu-01 | 192.168.1.160 | 255.255.255.0 | 192.168.1.1 | 114.114.114.114 | NameNode/SecondaryNameNode/ResourceManager/master/worker/zookeeper/HMaster/hive/pig |
| Ubuntu-02 | 192.168.1.170 | 255.255.255.0 | 192.168.1.1 | 114.114.114.114 | DataNode/NodeManager/worker/zookeeper/HRegion |
| Ubuntu-03 | 192.168.1.180 | 255.255.255.0 | 192.168.1.1 | 114.114.114.114 | DataNode/NodeManager/worker/zookeeper/HRegion |

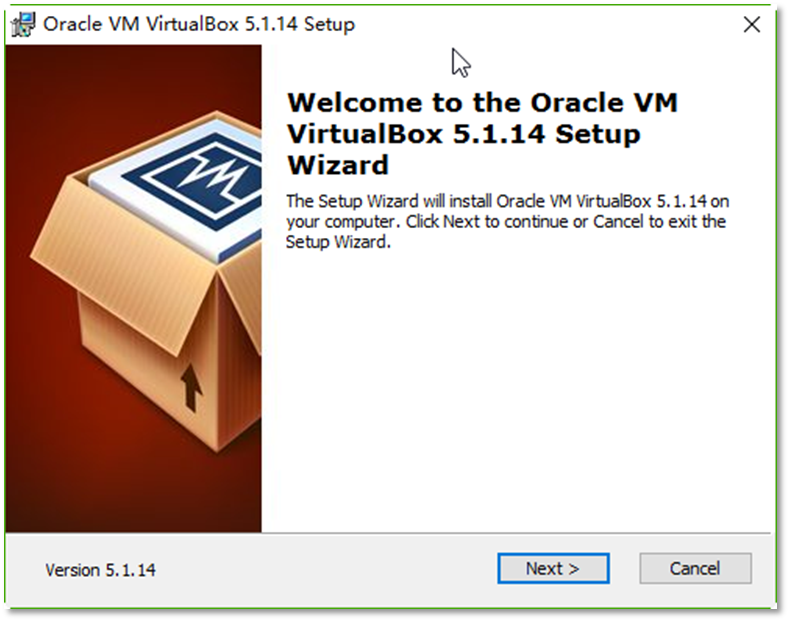

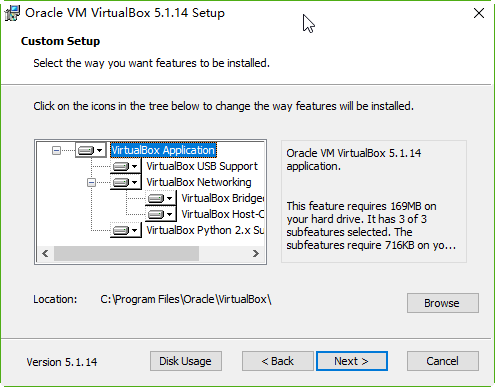

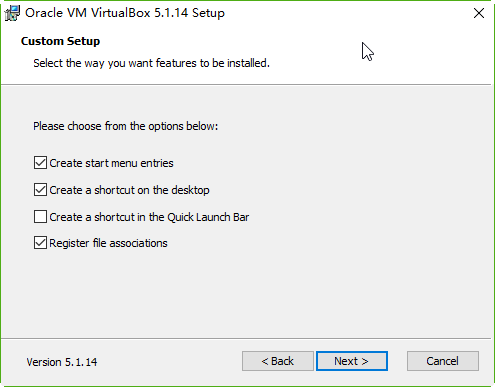

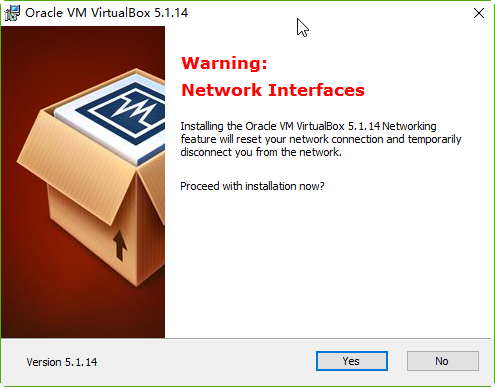

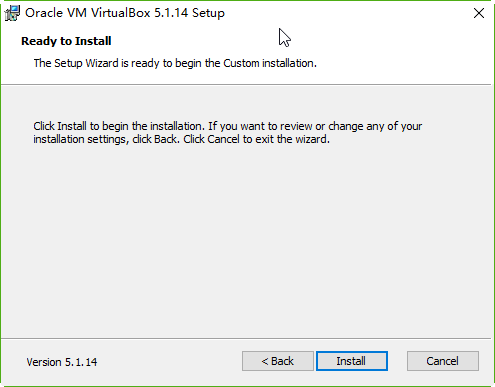

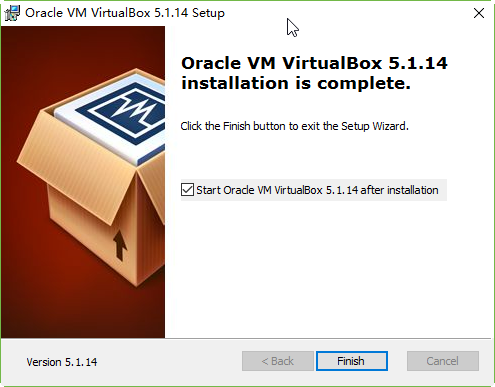

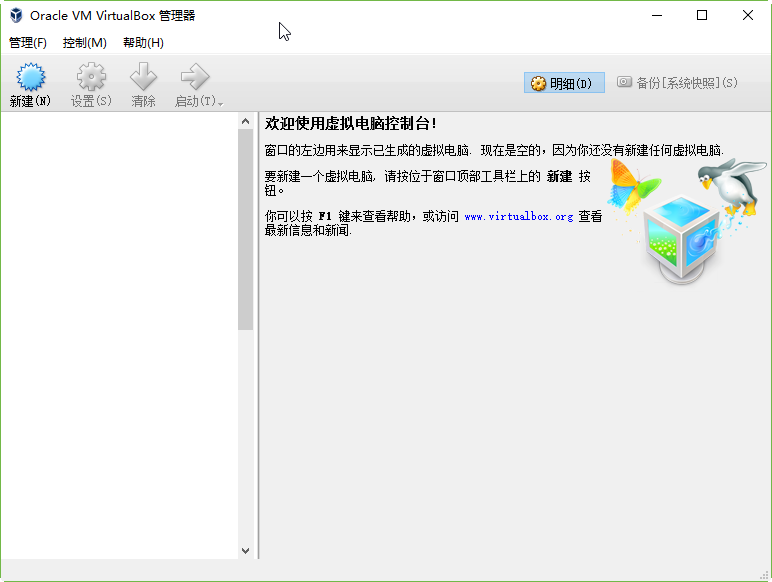

Install VirtualBox

First, install VirtualBox on the host. The version I installed is VirtualBox-5.1.14-112924-Win.

Download: https://www.virtualbox.org/

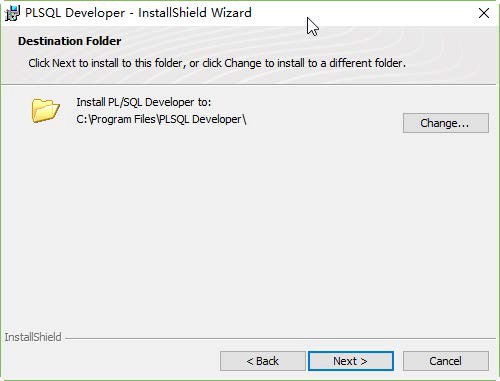

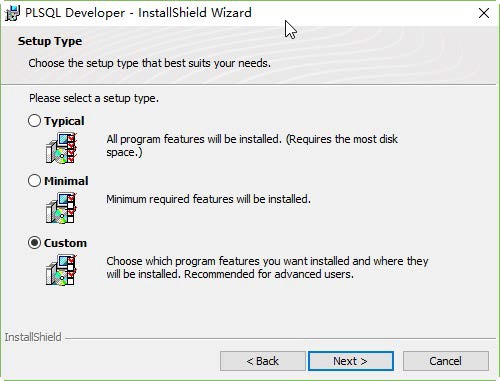

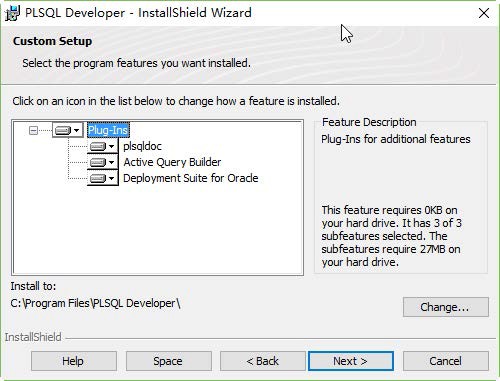

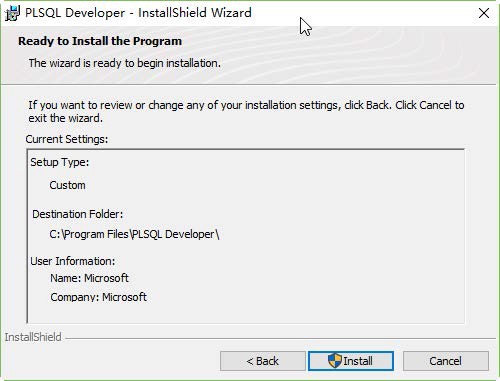

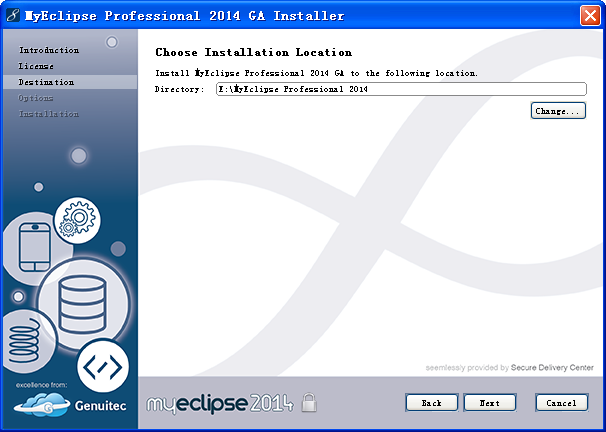

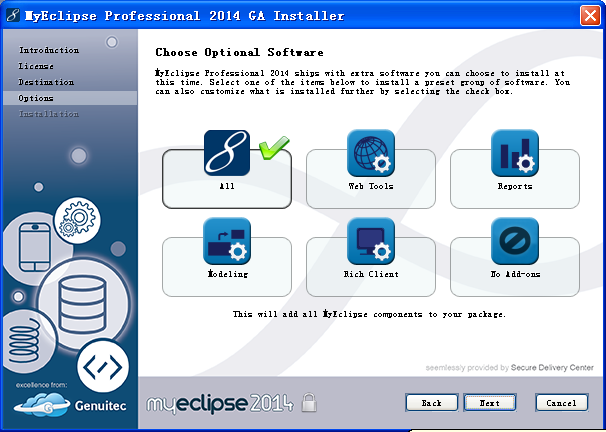

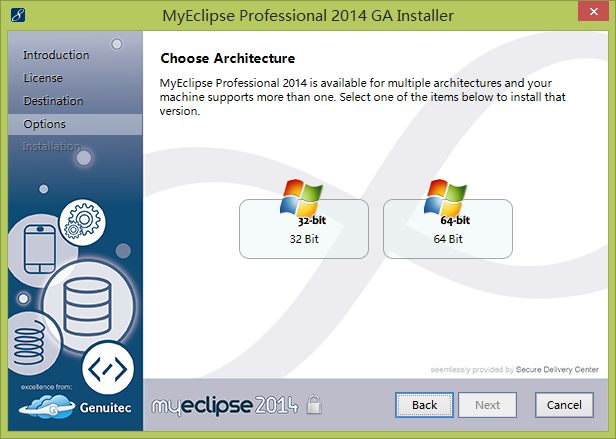

Click VirtualBox-5.1.14-112924-Win.exe and follow the on-screen instructions to install it:

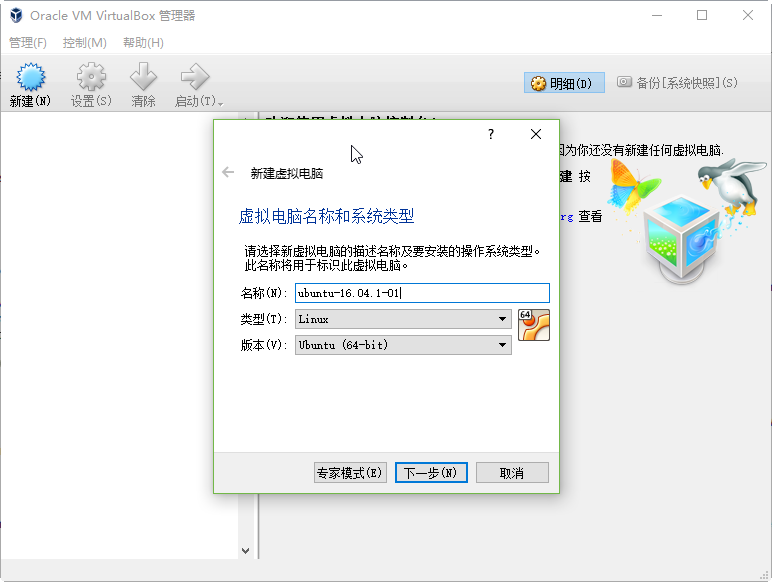

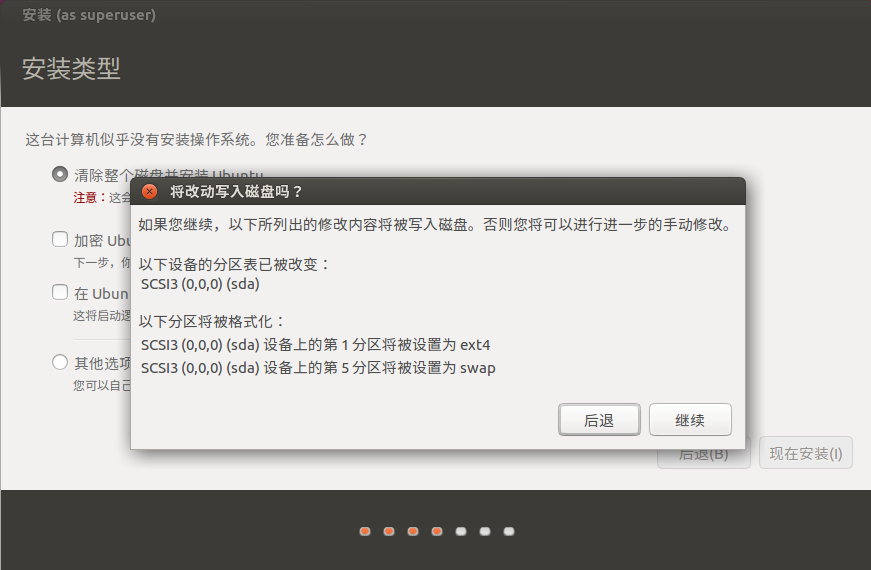

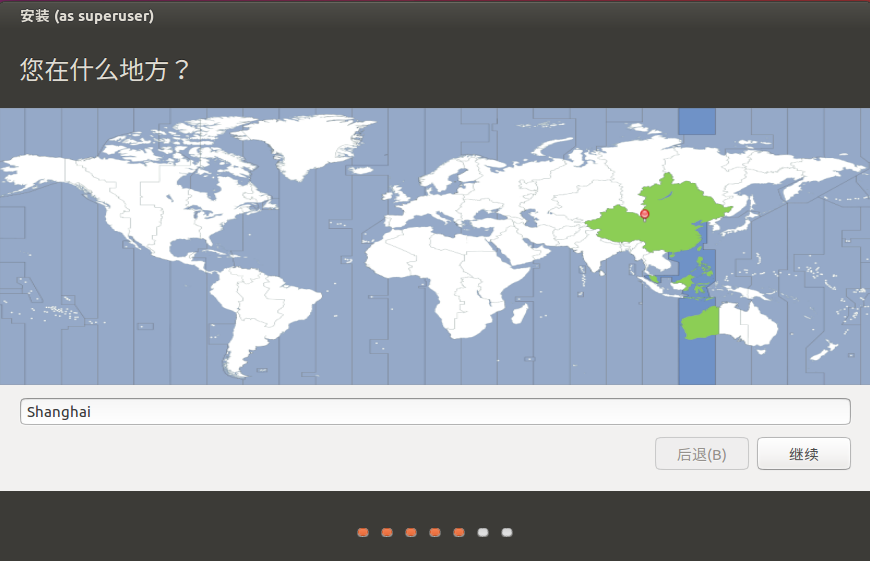

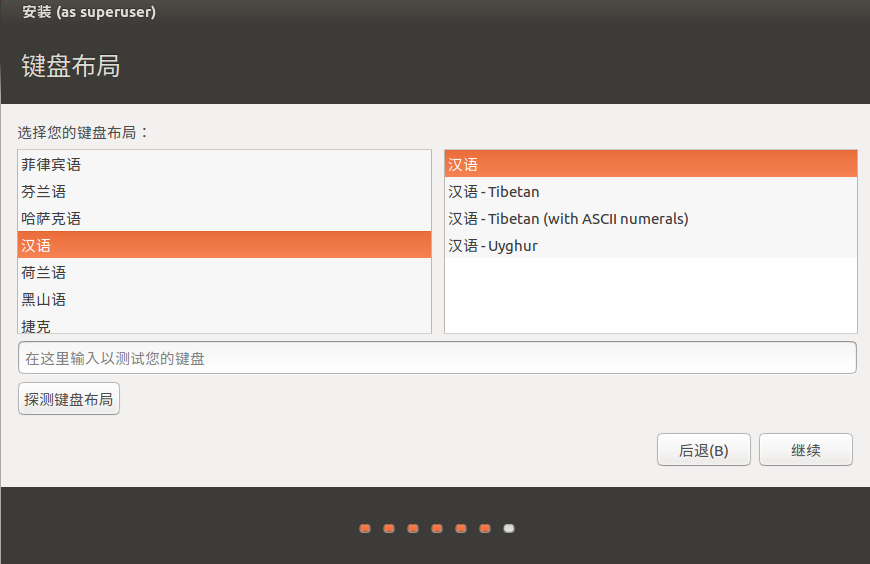

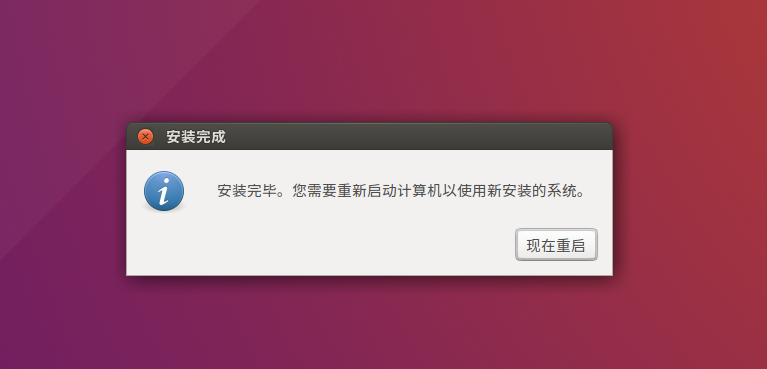

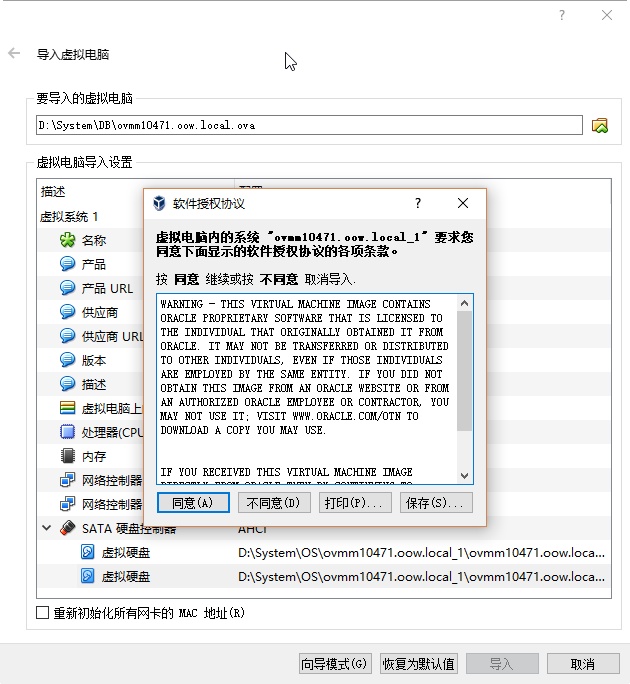

Install Linux (Ubuntu)

After installing VirtualBox, install a Linux server inside it. The Linux distribution I used is ubuntu-16.04.1-desktop-amd64.

Download: https://www.ubuntu.com/download

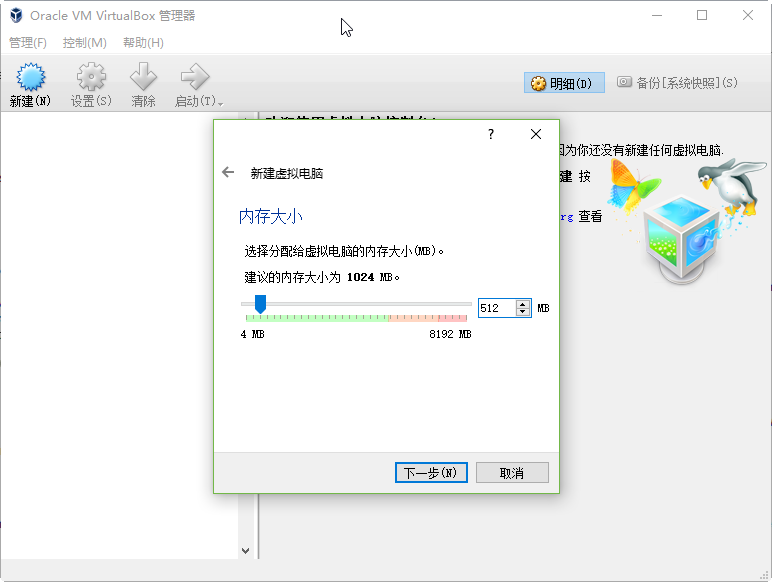

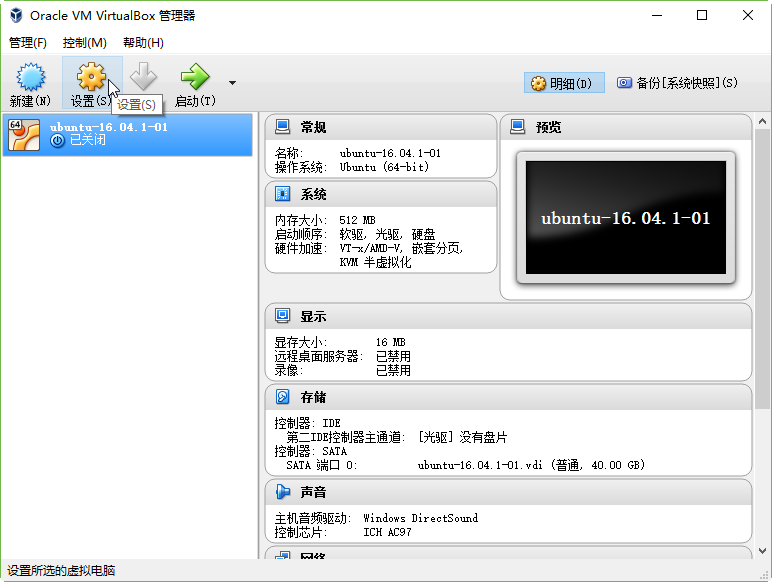

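Here you allocate memory to the virtual machine. If the host has plenty of memory, allocate more. I allocated 512 MB at this point but later changed it to 1024 MB.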

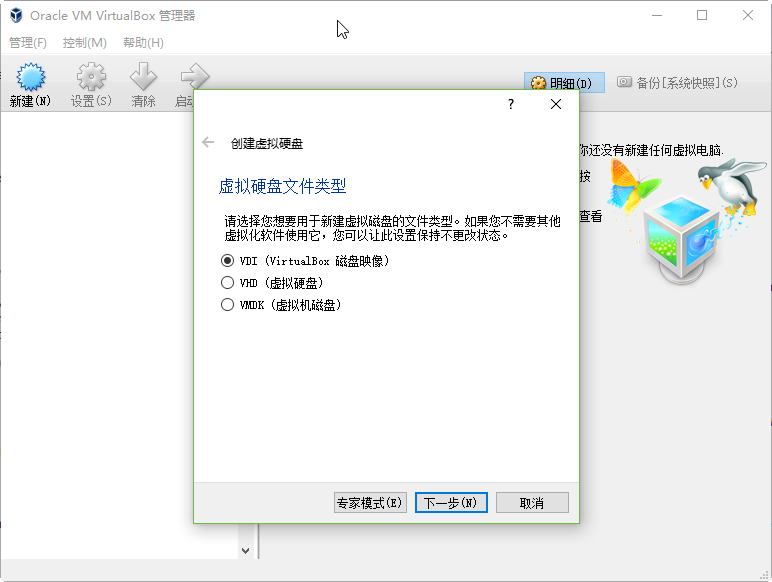

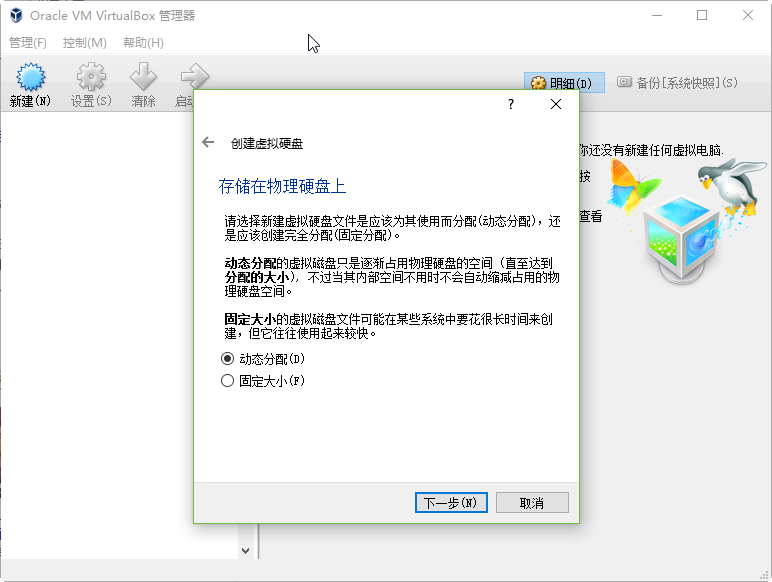

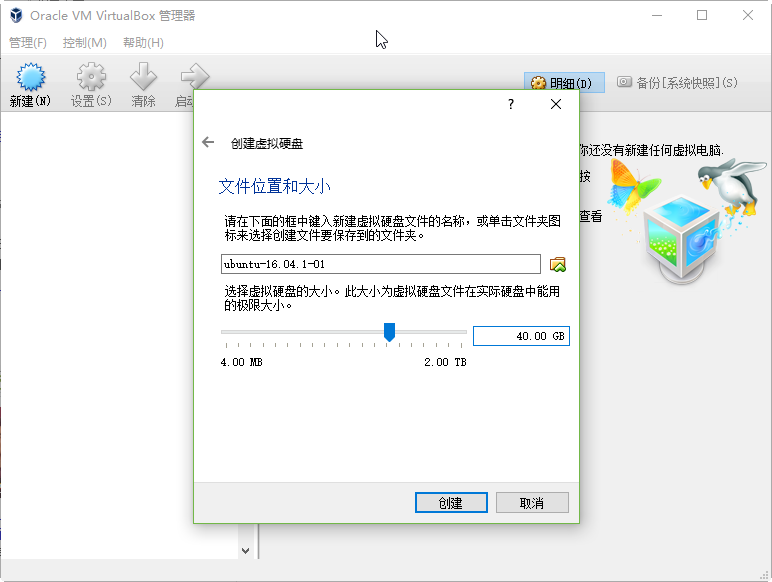

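Here you allocate the virtual machine's disk capacity. If the host has plenty of disk space, allocate more. It is also best to store the virtual disk on a fast device such as an SSD, which improves the virtual machine's responsiveness.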

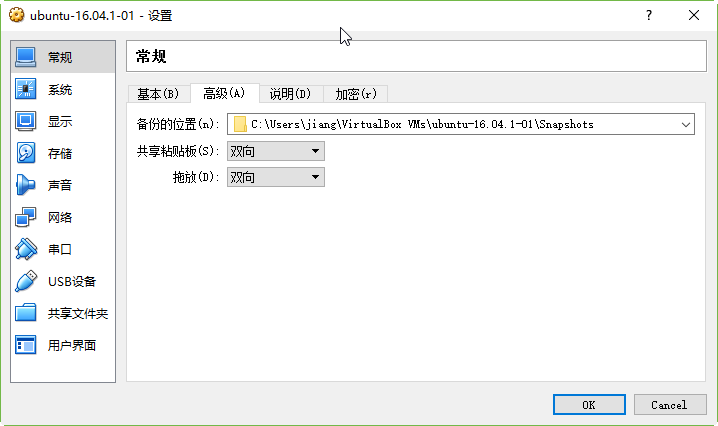

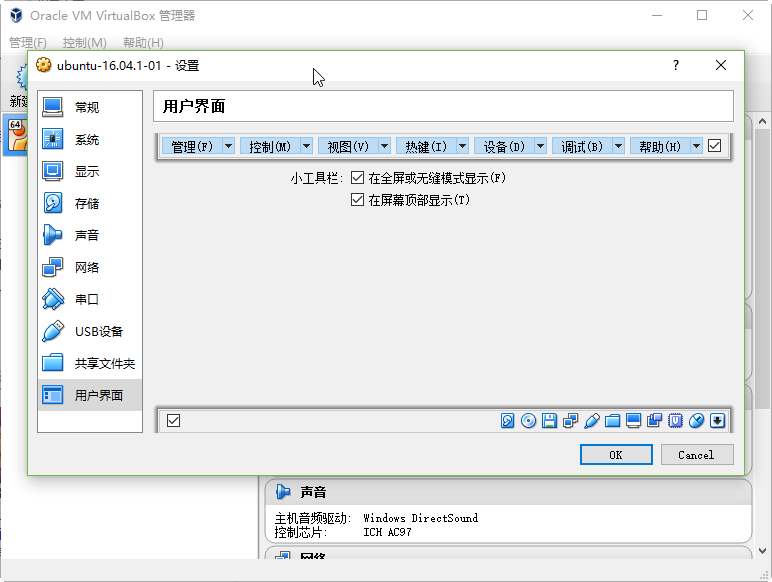

Set both the shared clipboard and drag-and-drop to bidirectional:

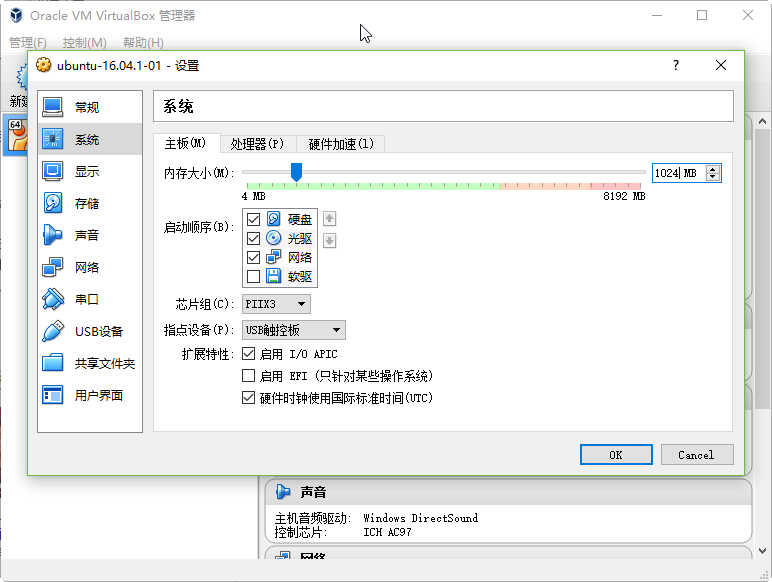

Here the virtual machine's memory is changed to 1024 MB.

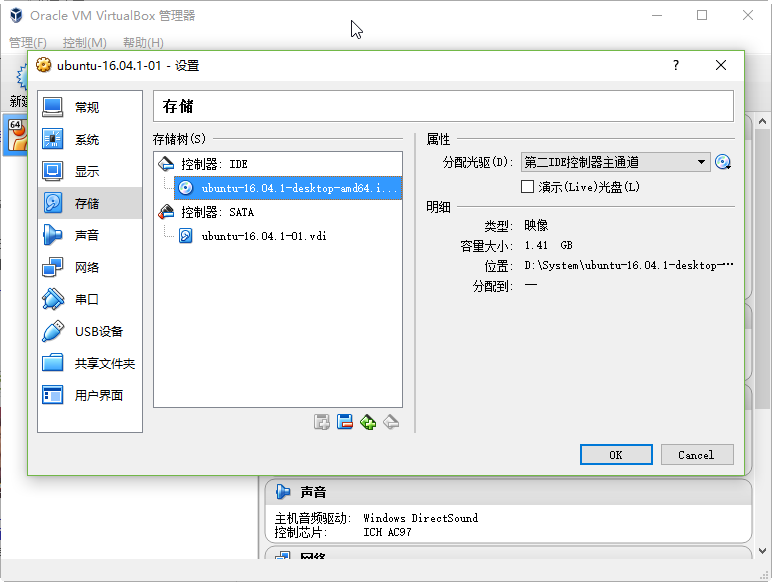

Click the optical disc icon on the right and select the ubuntu-16.04.1-desktop-amd64.iso downloaded earlier.

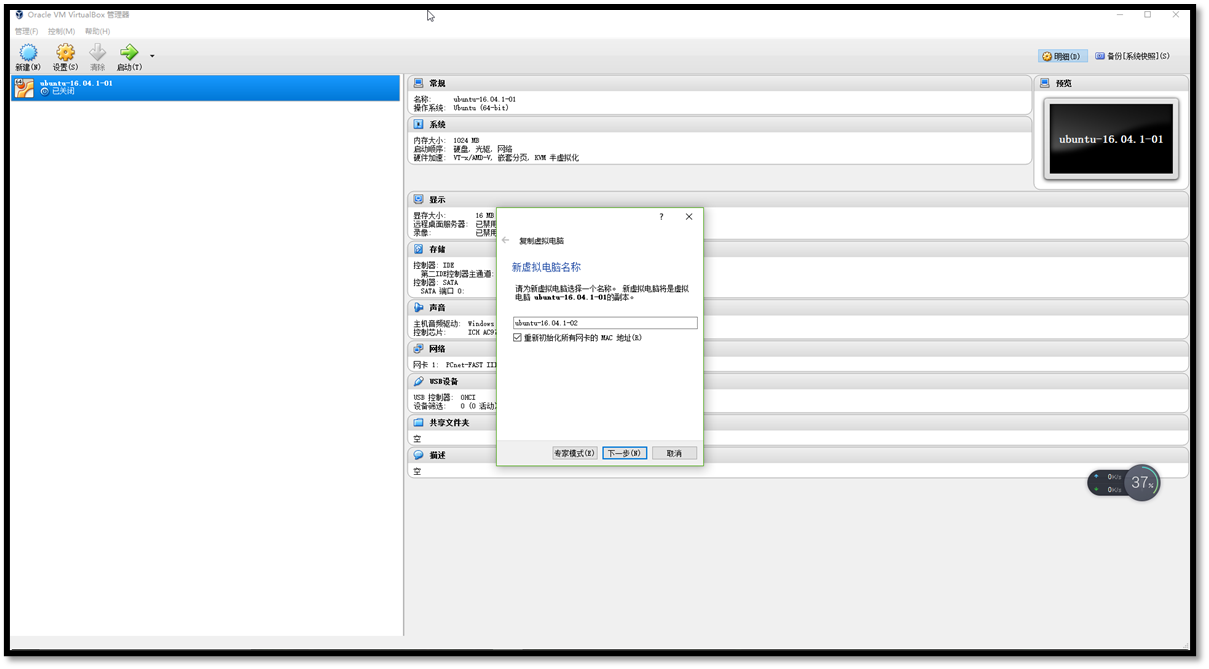

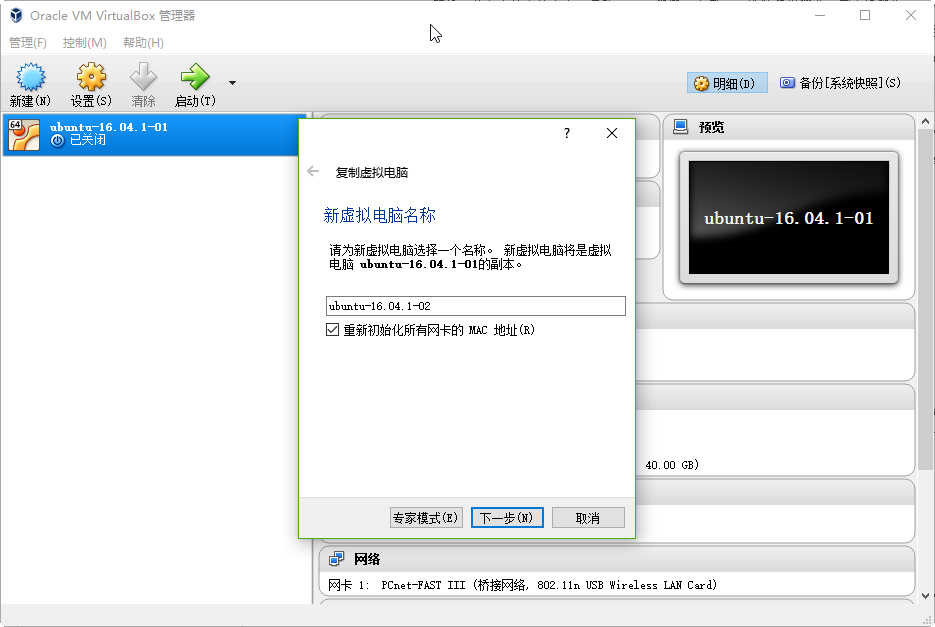

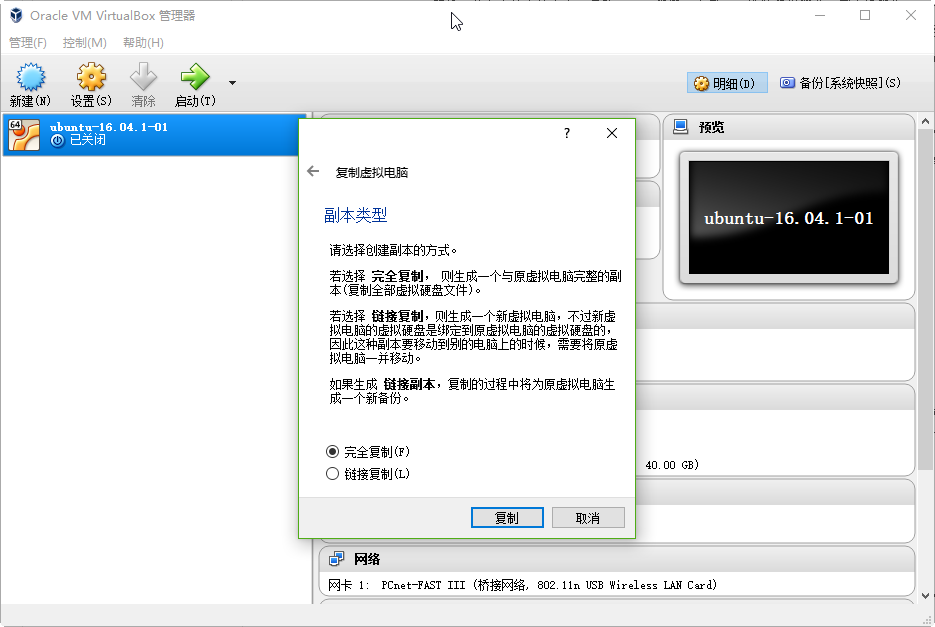

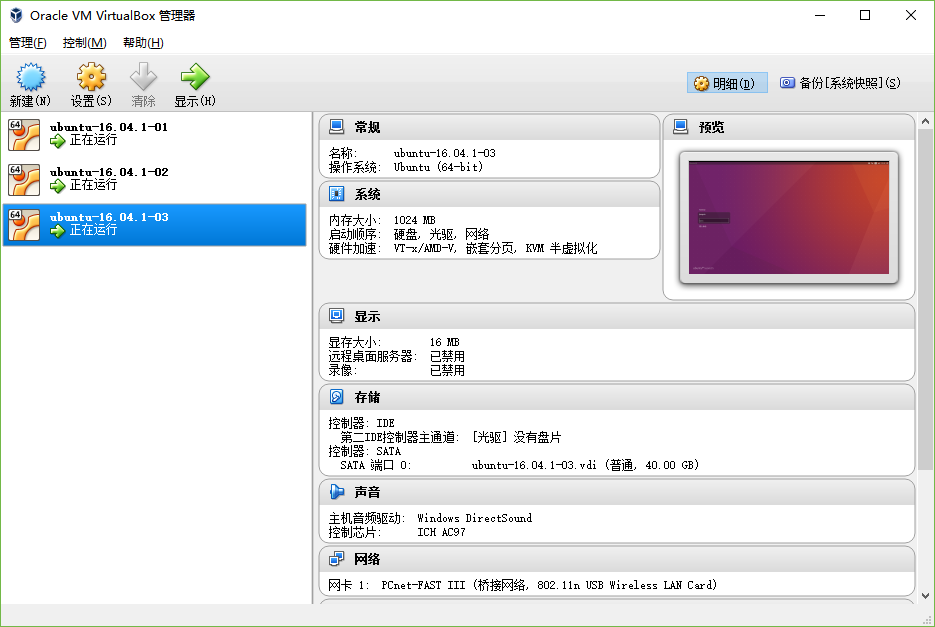

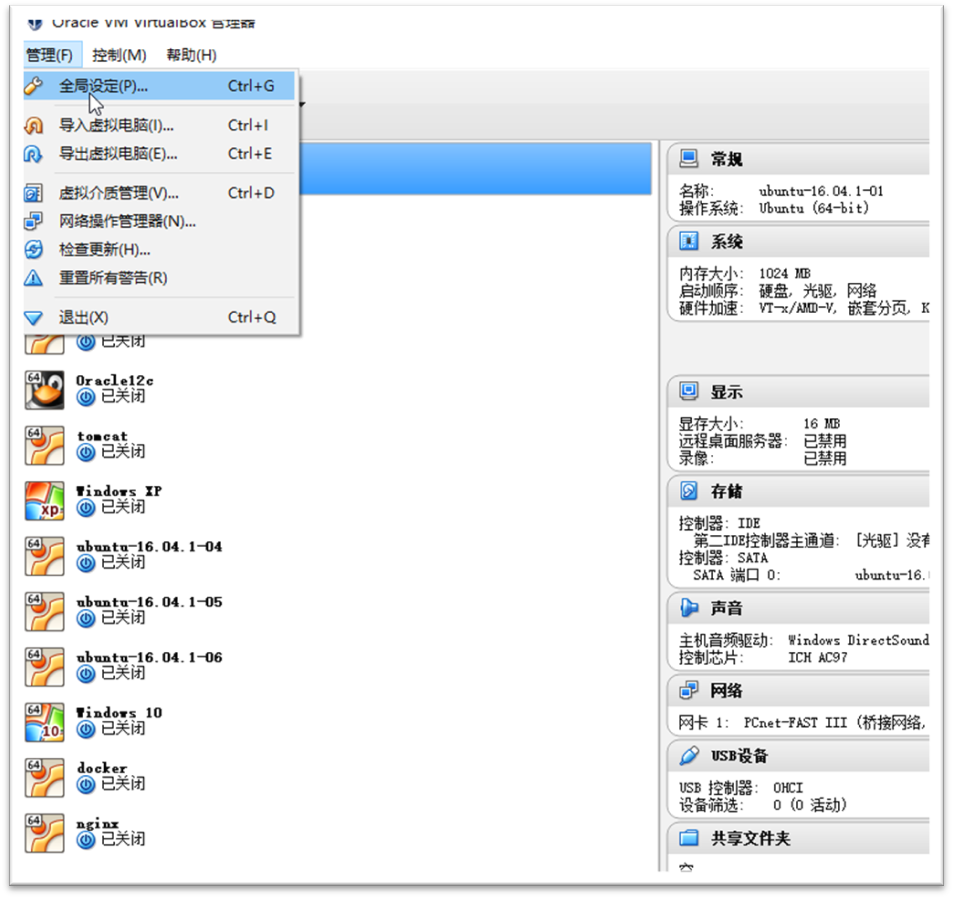

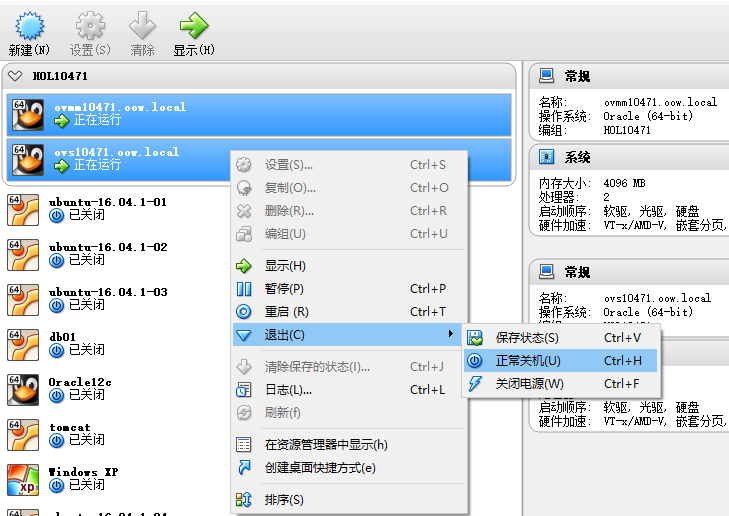

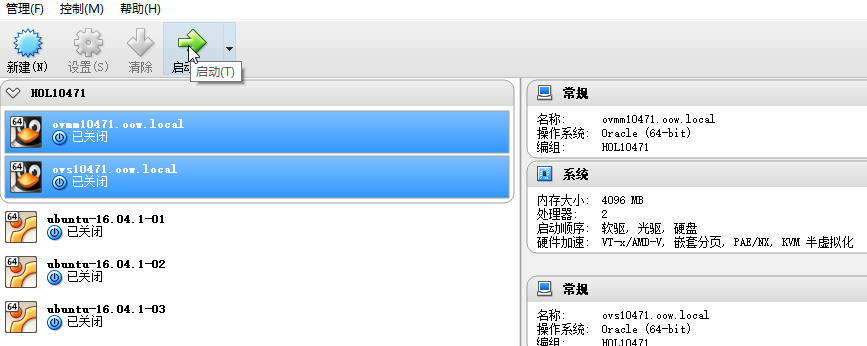

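Clone the virtual machine

After installing Ubuntu-16.04.1-01, clone that virtual machine to create Ubuntu-16.04.1-02 and Ubuntu-16.04.1-03.

The result after cloning is shown below:

Configure each virtual machine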

hadoop@Ubuntu-01:~$ sudo apt-get install lrzsz

hadoop@Ubuntu-01:~$ sudo apt-get install vim

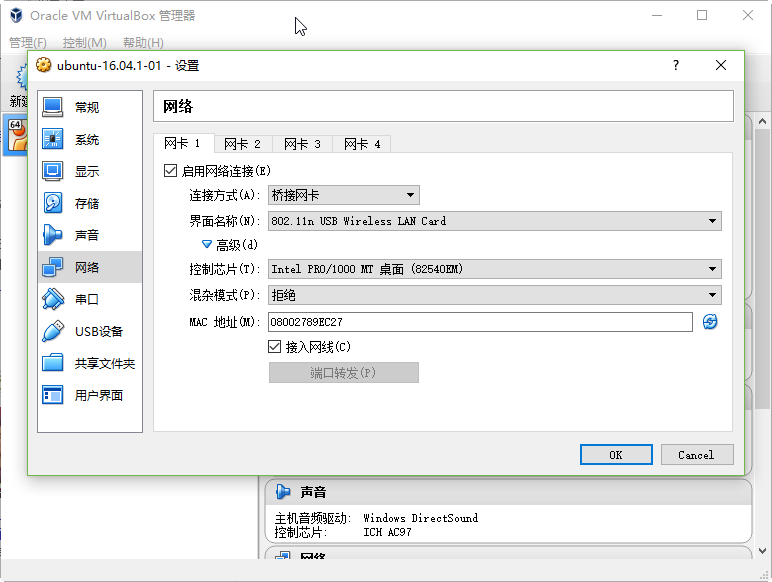

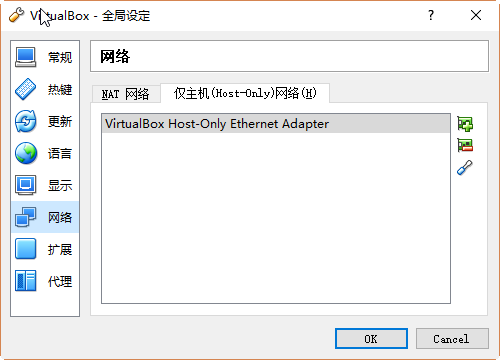

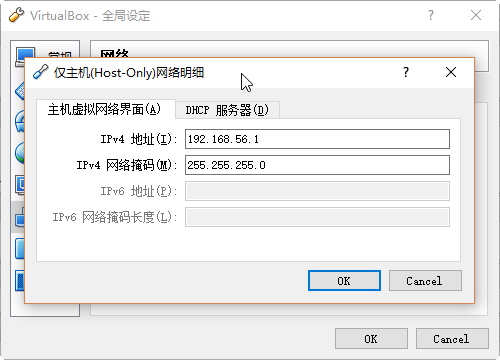

Configure the network

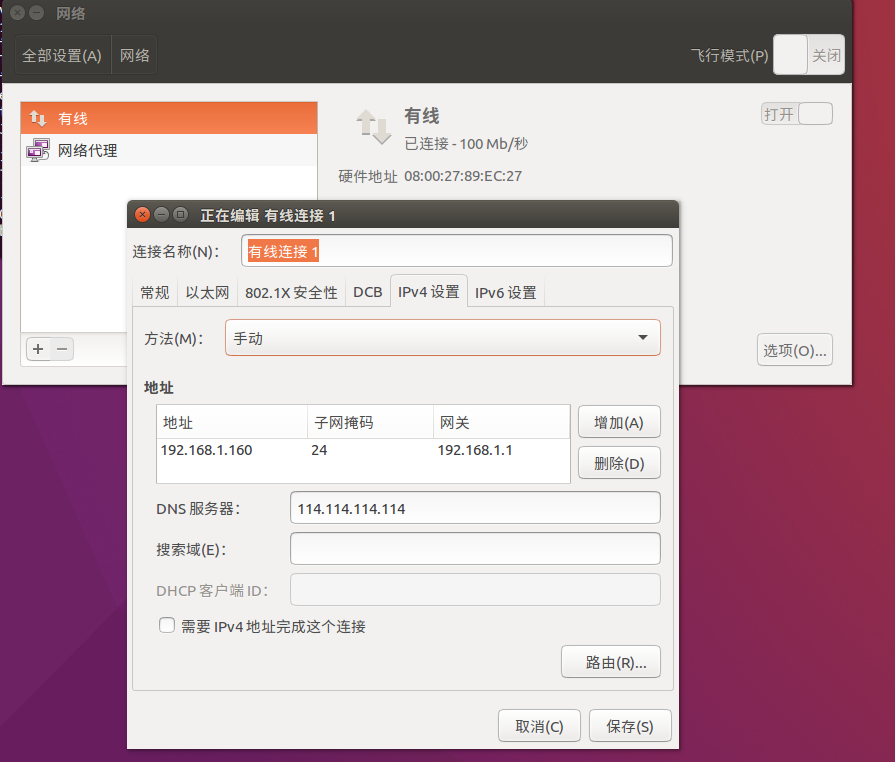

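Change the server's IP assignment to a static IP: click System Settings - Network - Options - IPv4 Settings and configure it as shown below. Do not forget the DNS server setting, otherwise the virtual machine will not be able to reach the Internet.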

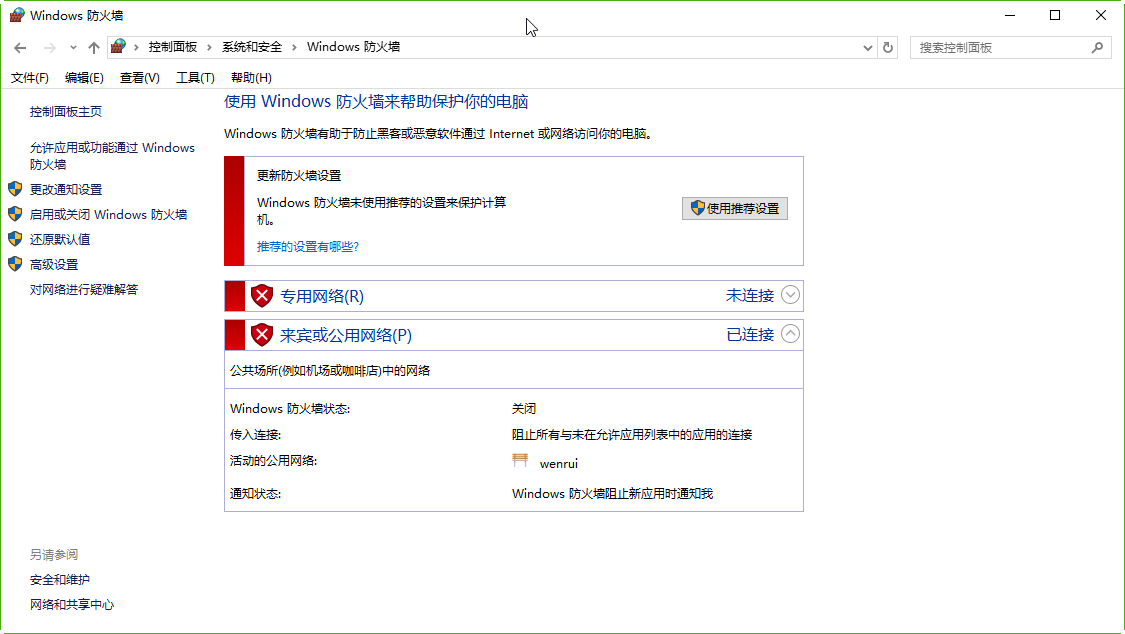

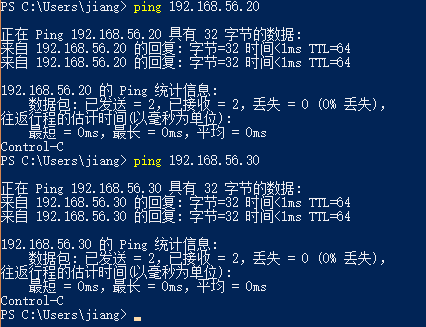

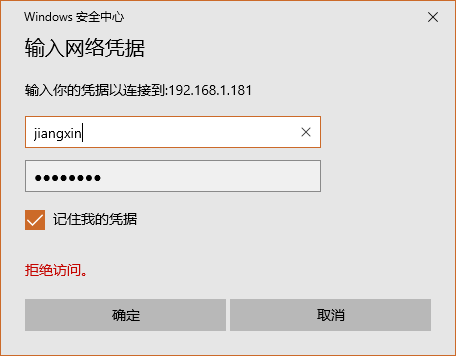

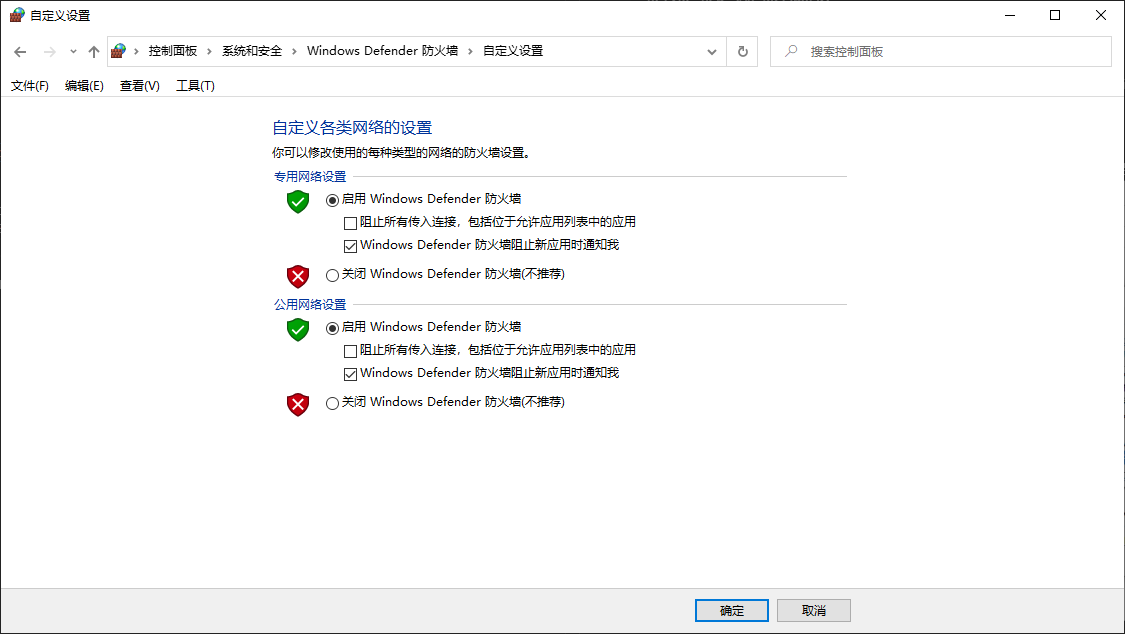

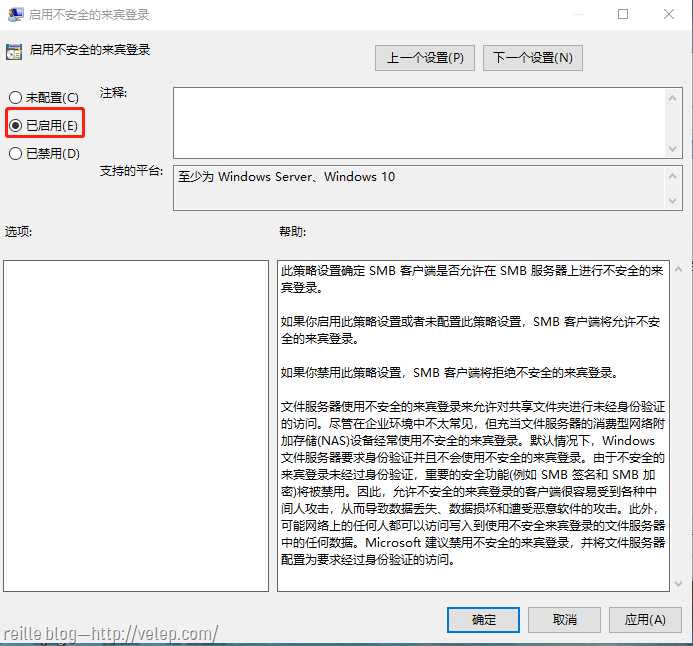

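After configuring the network, try pinging between the host and the virtual machines in both directions. If both directions succeed, the network is configured correctly; otherwise check the firewall and related settings. During this installation the host could ping the virtual machines, but the virtual machines could not ping the host. Turning off the Windows firewall solved the problem.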
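The reachability check described above can be repeated from any of the machines with a small loop. This is only a sketch: the addresses are the ones from the cluster table, and the `check_reachable` helper is a hypothetical name introduced here, not part of the original procedure. It assumes the Linux `ping` options `-c` (packet count) and `-W` (reply timeout in seconds).

```shell
# Addresses from the cluster table above; adjust them to your own network.
HOSTS="192.168.1.100 192.168.1.160 192.168.1.170 192.168.1.180"

# Hypothetical helper: send one ICMP echo request and wait at most 1 second.
check_reachable() {
  if ping -c 1 -W 1 "$1" >/dev/null 2>&1; then
    echo "$1 reachable"
  else
    echo "$1 UNREACHABLE"
  fi
}

for host in $HOSTS; do
  check_reachable "$host"
done
```

If a machine is reported as UNREACHABLE in only one direction, a firewall on the target (as with the Windows host here) is a likely cause.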


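Install openssh-server

To make communication between the host and the virtual machines, and among the virtual machines, more convenient, install openssh-server in each virtual machine. (Ubuntu installs the SSH client by default.)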

sudo apt-get install openssh-server

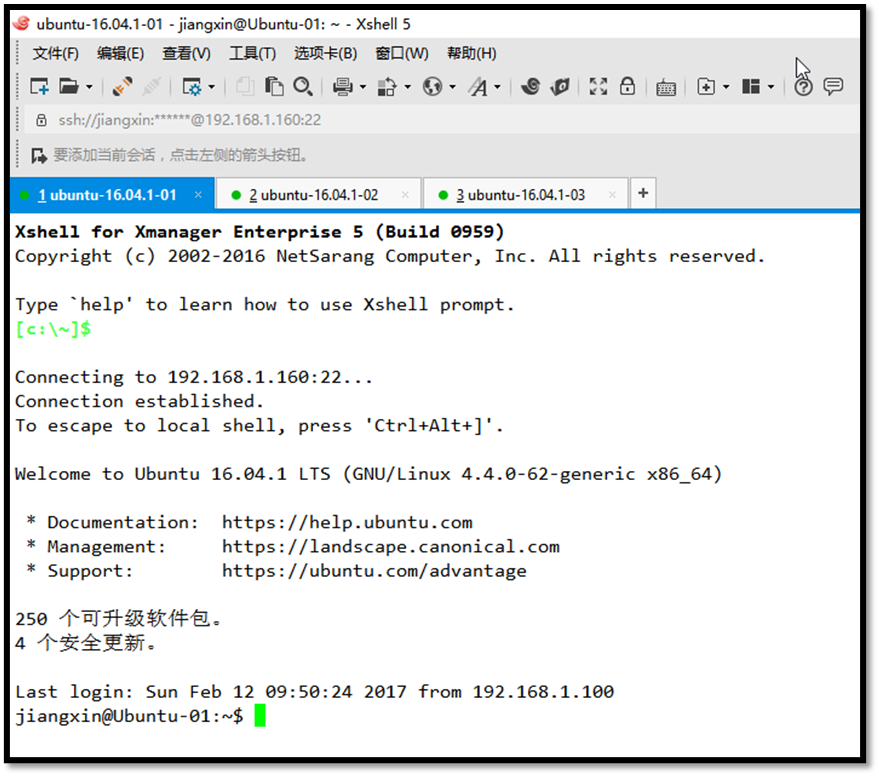

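After installation, you can log in to each virtual machine from the Windows host with XShell.

Enable the root user

Some later operations (such as file transfers) require the root user, but Ubuntu does not enable root by default. Set a root password to enable it:

sudo passwd root

Change the hostname

The cloned virtual machines all have the hostname Ubuntu-01. To tell the servers apart in the cluster later, give each machine a different name. The machine name is determined by the /etc/hostname file:

sudo vim /etc/hostname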

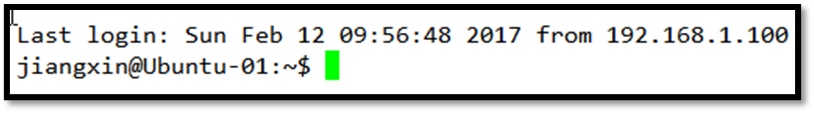

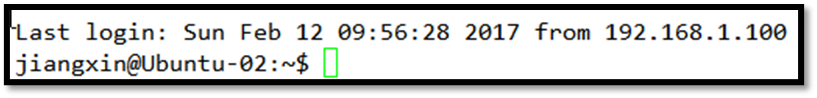

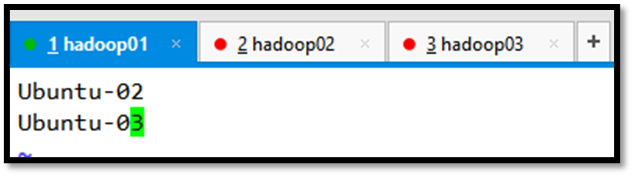

On the three virtual machines, set the names to Ubuntu-01, Ubuntu-02 and Ubuntu-03 respectively. Reboot all three (sudo reboot) and log in again with XShell. The result looks like this:

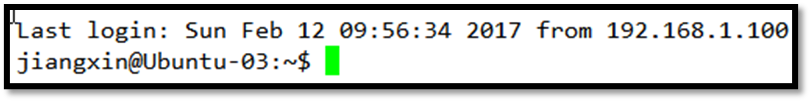

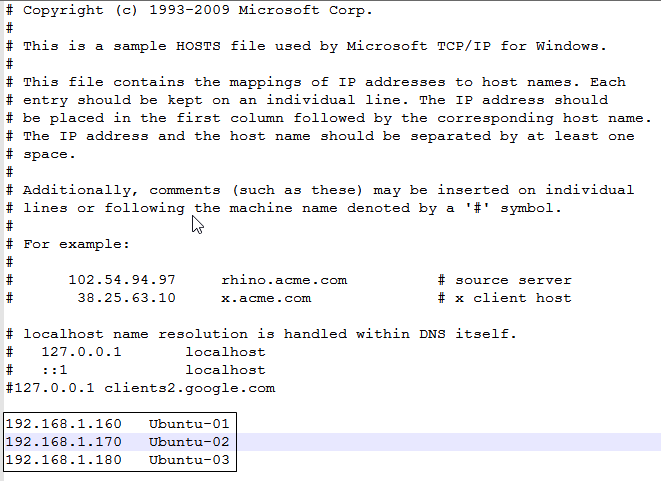

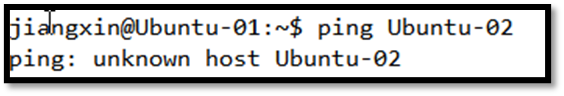

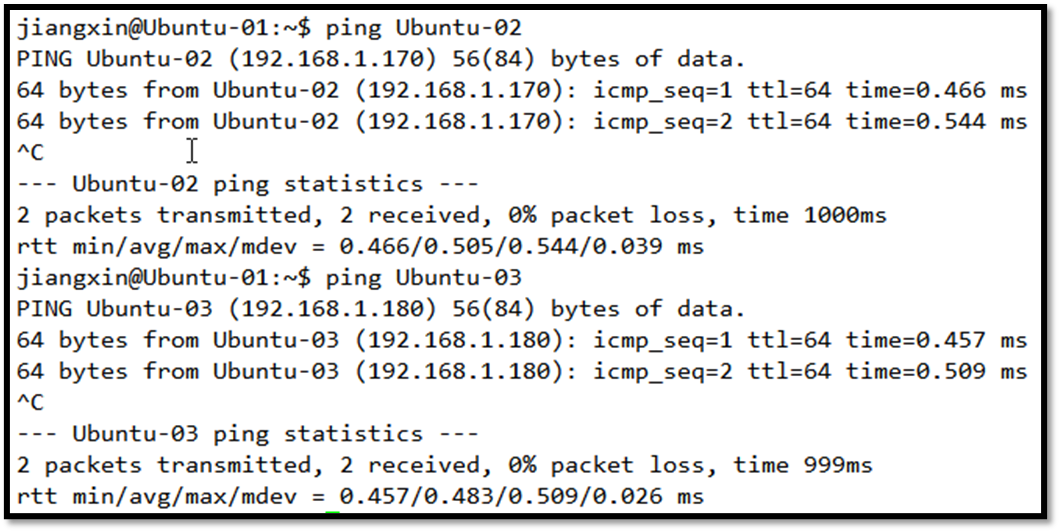

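Edit hosts

To make communication between the host and the virtual machines, and among the virtual machines, easier, configure the hosts file on the host and on all three virtual machines:

Windows host (C:\windows\system32\drivers\etc)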

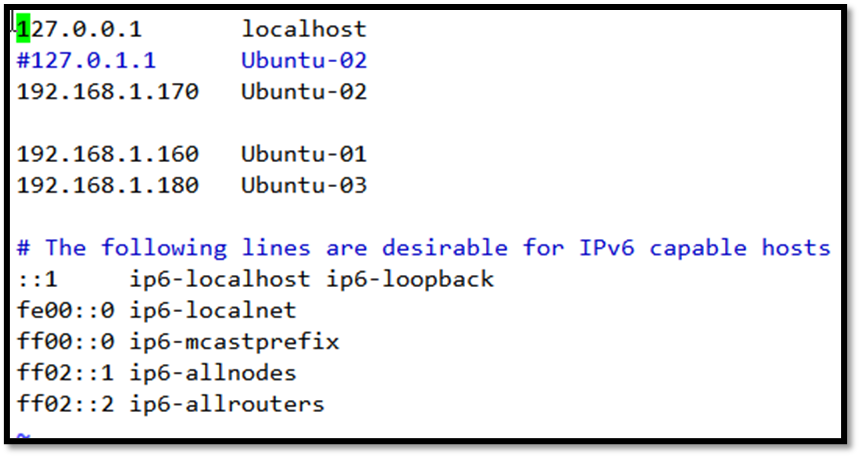

Ubuntu-01(/etc/hosts)

Ubuntu-02(/etc/hosts)

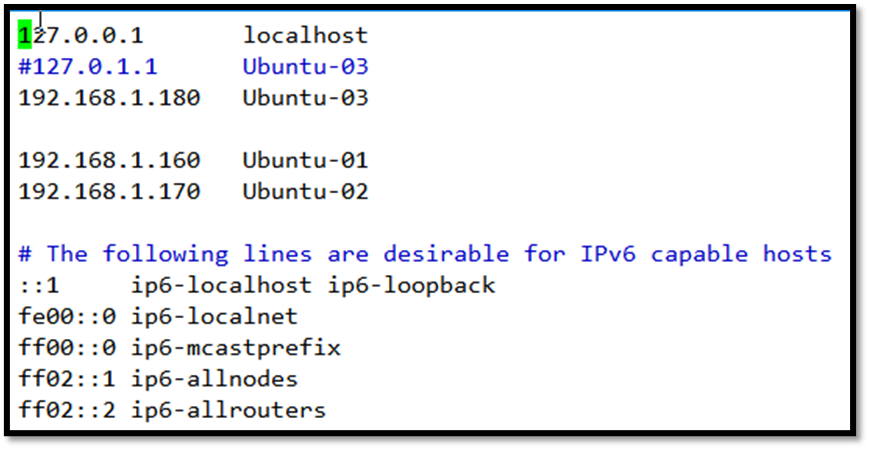

Ubuntu-03(/etc/hosts)

Before the change:

After the change:

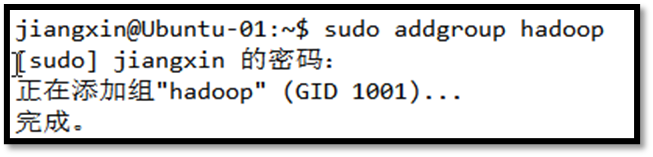

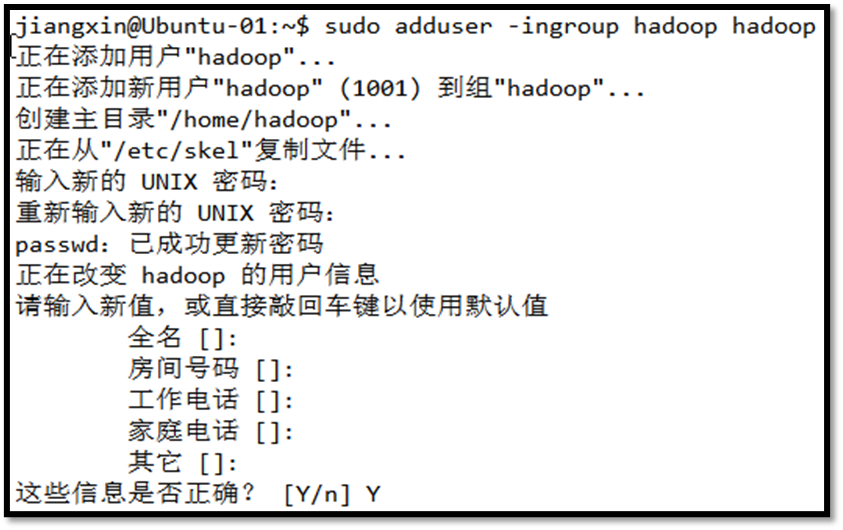
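The hosts entries themselves only appear in the screenshots. As a sketch, the mappings implied by the cluster table can be generated as follows; the `hosts_entries` helper is a hypothetical name, and the exact lines in the original files may differ.

```shell
# Hostname-to-IP mappings taken from the cluster table at the top of this manual.
hosts_entries() {
  cat <<'EOF'
192.168.1.100 DESKTOP-LSB0HI4
192.168.1.160 Ubuntu-01
192.168.1.170 Ubuntu-02
192.168.1.180 Ubuntu-03
EOF
}

# Preview the entries. On a real virtual machine they could be appended with:
#   hosts_entries | sudo tee -a /etc/hosts
hosts_entries
```

Printing first and appending only afterwards keeps the sketch safe to try, since a broken /etc/hosts can make name resolution fail on that machine.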

Create the hadoop group and user

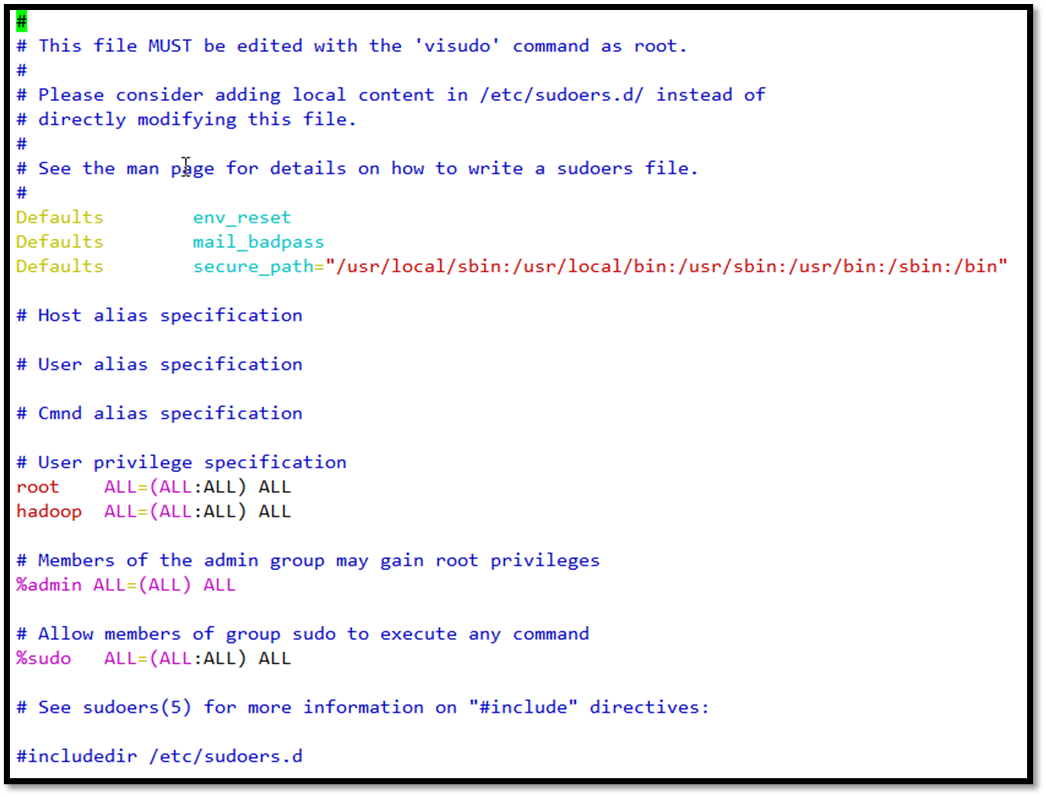

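The hadoop user also needs sudo privileges. Open the /etc/sudoers file and add hadoop ALL=(ALL:ALL) ALL below the line root ALL=(ALL:ALL) ALL.

After creating the hadoop user, su to it for the following steps. Unless stated otherwise, all operations in the rest of this document are performed as the hadoop user.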

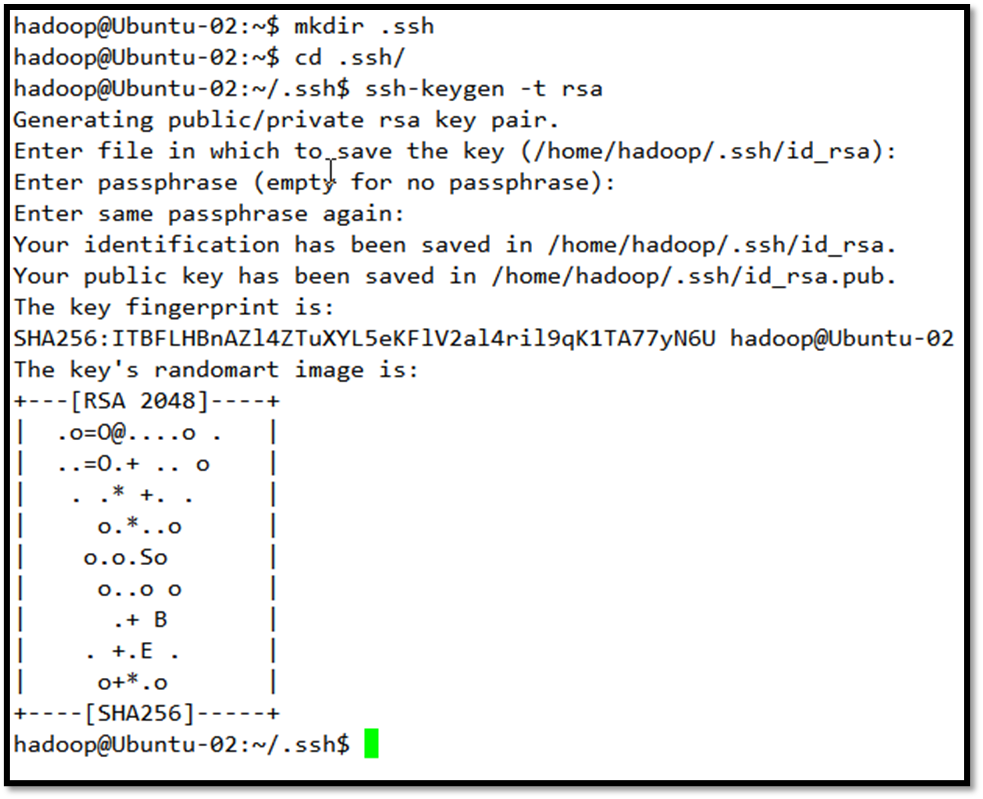

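Configure passwordless ssh login

To build the distributed computing system, the three machines need passwordless access to each other (or at least the master needs passwordless access to the two slaves).

Create a .ssh folder under the home directory /home/hadoop, and inside .ssh run:

ssh-keygen -t rsa

The command asks a few configuration questions. Since this is a first experiment, they are not needed; just press Enter to continue. When it finishes, two files, id_rsa and id_rsa.pub, are generated under .ssh/. Do the same on all three machines.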

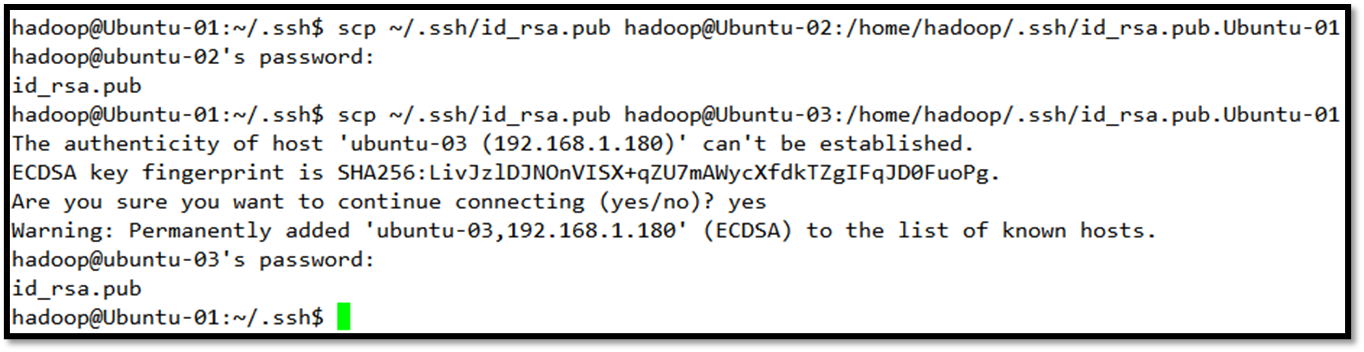

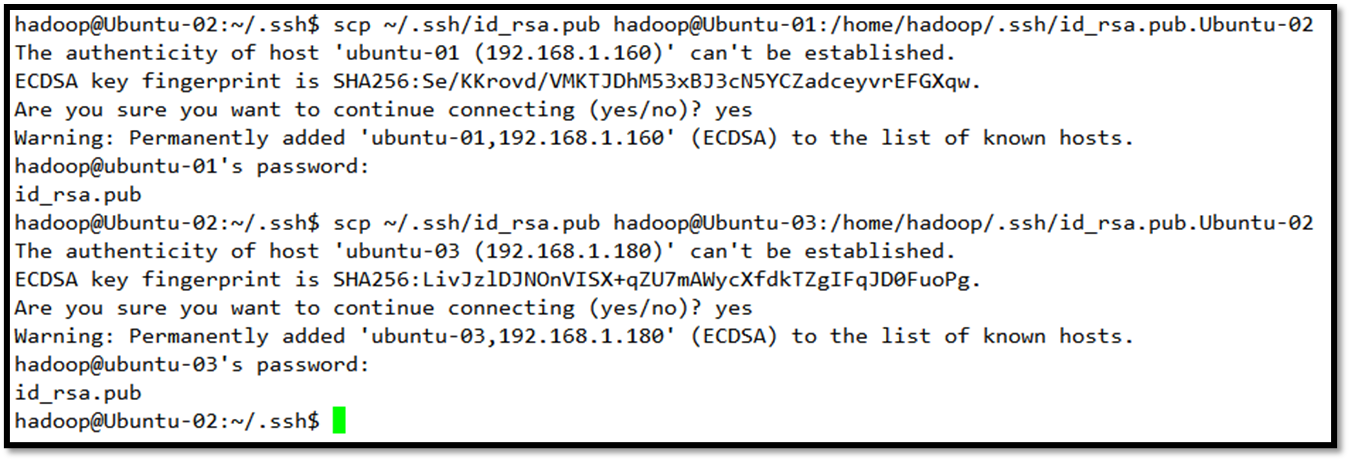

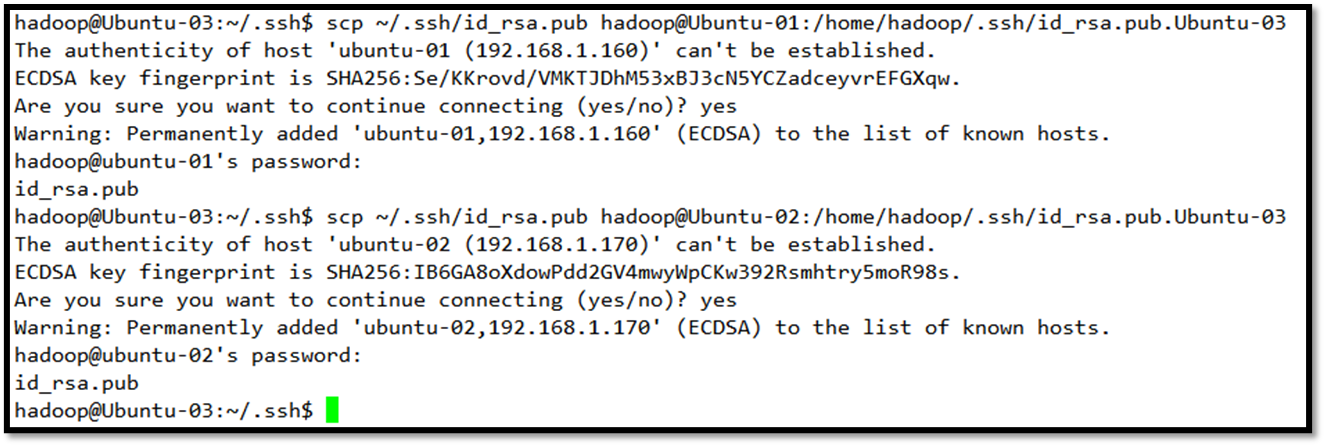

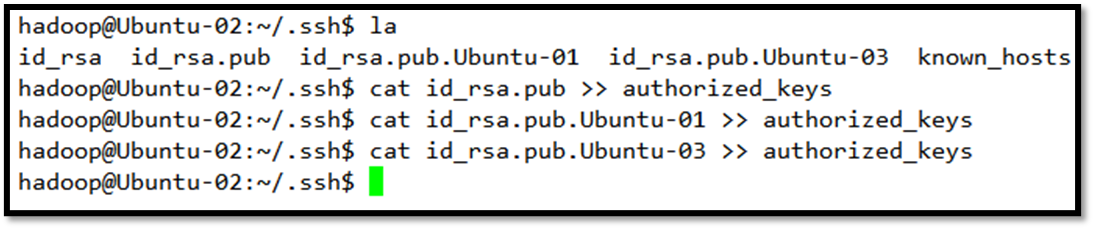

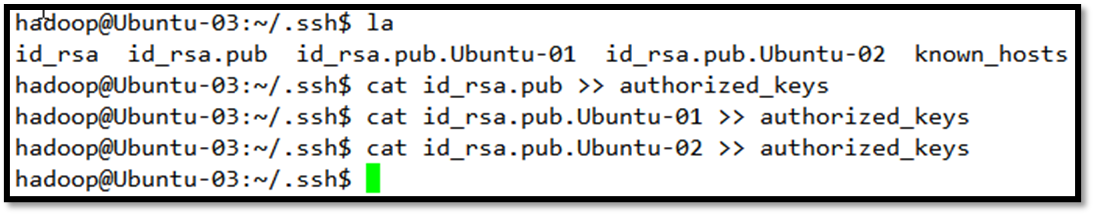

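Next, exchange the keys: give Ubuntu-01's public key to Ubuntu-02 and Ubuntu-03, and do the same for Ubuntu-02 and Ubuntu-03. Afterwards each machine's ~/.ssh/ should contain three public keys: its own and the other two machines'.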

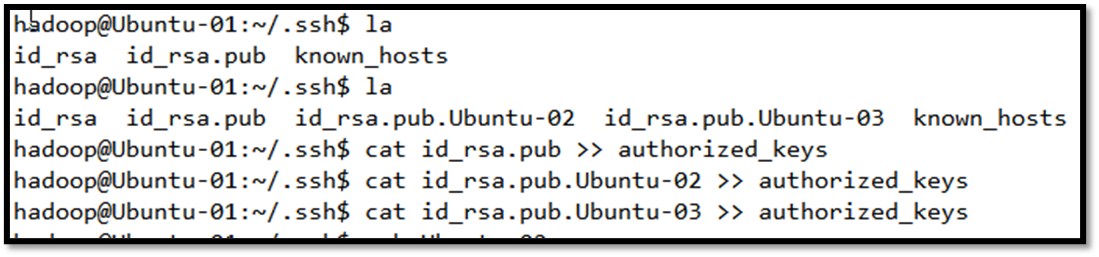

Add your own public key, together with the other two machines' public keys, to the authorized keys:

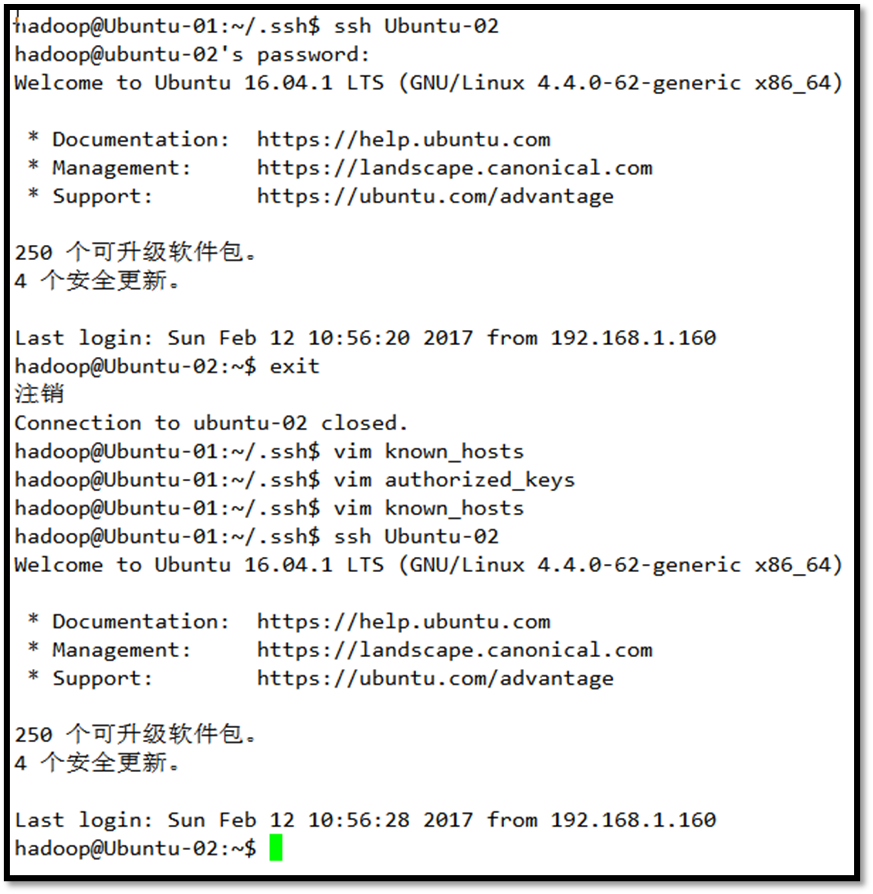
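As an alternative to copying the key files by hand, the same result can usually be reached with ssh-copy-id, which appends a public key to authorized_keys on the target machine. This sketch swaps in that tool and is not the method shown in the screenshots; the `distribute_key` helper and the `DRY_RUN` switch are hypothetical names introduced for illustration.

```shell
# Hostnames from the cluster table.
NODES="Ubuntu-01 Ubuntu-02 Ubuntu-03"

# DRY_RUN=1 (the default in this sketch) only prints the commands.
# Set DRY_RUN=0 to actually run them; each one asks for the hadoop password once.
DRY_RUN="${DRY_RUN:-1}"

distribute_key() {
  for node in $NODES; do
    # ssh-copy-id appends the given public key to ~/.ssh/authorized_keys on the target.
    cmd="ssh-copy-id -i $HOME/.ssh/id_rsa.pub hadoop@$node"
    if [ "$DRY_RUN" = "1" ]; then
      echo "$cmd"
    else
      $cmd
    fi
  done
}

distribute_key
```

Running this once on each of the three machines gives every machine all three public keys, including its own.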

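Next, verify that the machines can reach each other over ssh without a password.

A successful login shows the welcome message. The first connection requires answering yes; after that you can connect directly.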

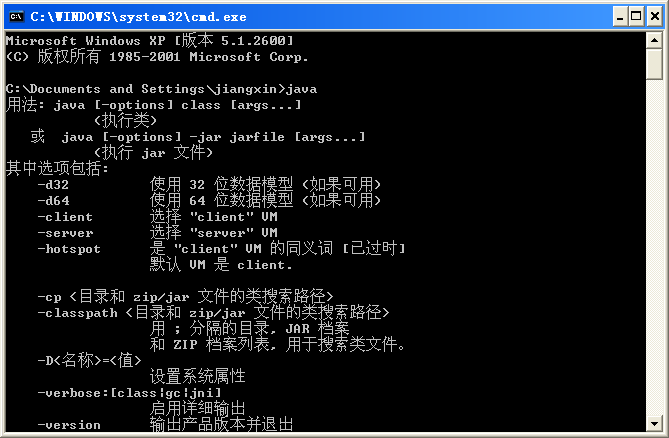

Install the JDK

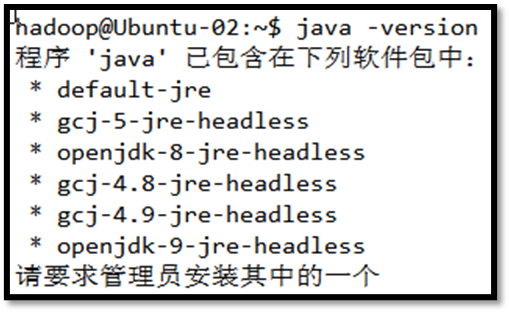

First run java -version to check whether OpenJDK is present on the system. If it is, remove it with the following command:

sudo apt-get purge openjdk*

No OpenJDK was found on the freshly installed system used here.

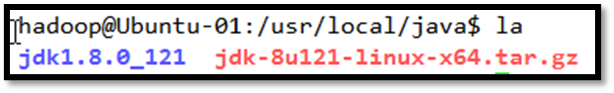

The JDK version installed here is jdk-8u121-linux-x64. Download: http://www.oracle.com

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir java

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop java/

Upload jdk-8u121-linux-x64.tar.gz to the java directory.

hadoop@Ubuntu-01:/usr/local$ cd java/

hadoop@Ubuntu-01:/usr/local/java$ tar -zxvf jdk-8u121-linux-x64.tar.gz

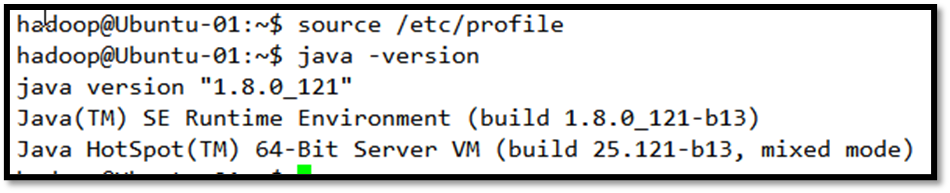

sudo vim /etc/profile

Add the following at the end of the file:

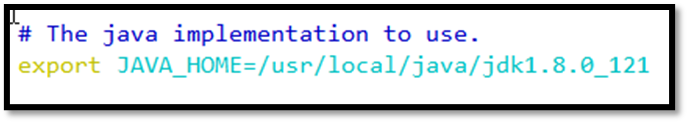

export JAVA_HOME=/usr/local/java/jdk1.8.0_121

export CLASSPATH=.:$JAVA_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$PATH

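Then check that the installation succeeded, for example by running source /etc/profile followed by java -version.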
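A slightly more defensive check is sketched below. It assumes that /etc/profile has already been reloaded in the current shell (source /etc/profile); the `check_java` helper is a hypothetical name, not something provided by the JDK.

```shell
# Hypothetical helper: confirm JAVA_HOME points at a usable JDK.
check_java() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set"
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "no java binary under $JAVA_HOME"
    return 1
  fi
  # java -version prints to stderr, so redirect it to stdout.
  "$JAVA_HOME/bin/java" -version 2>&1
}

check_java || echo "JDK check failed"
```

With the settings from this manual, a successful run should report version 1.8.0_121, matching the java = 1.8.0_121 line in the NameNode startup log later on.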

Install Hadoop

cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir hadoop

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop hadoop/

hadoop@Ubuntu-01:/usr/local$ cd hadoop/

hadoop@Ubuntu-01:/usr/local/hadoop$ tar -xzvf hadoop-2.7.3.tar.gz

hadoop@Ubuntu-01:/usr/local/hadoop$ rm hadoop-2.7.3.tar.gz

hadoop@Ubuntu-01:/usr/local/hadoop$ sudo vim /etc/profile

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.7.3

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

hadoop@Ubuntu-01:/usr/local/hadoop$ source /etc/profile

Edit the Hadoop configuration files

The Hadoop configuration files are all under /usr/local/hadoop/hadoop-2.7.3/etc/hadoop.

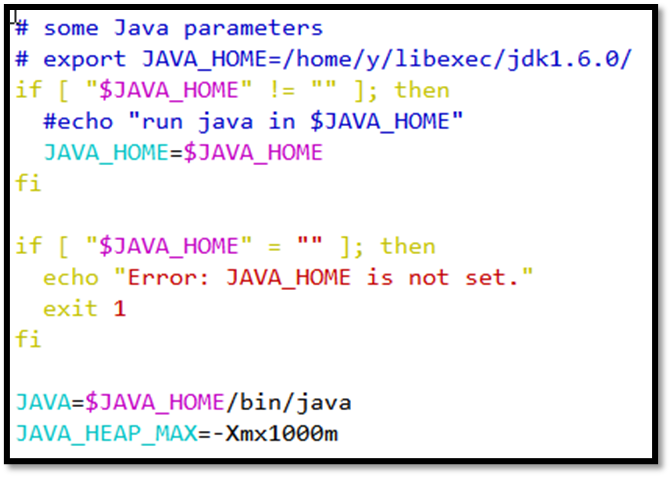

hadoop-env.sh

Although the JAVA_HOME environment variable is already set in /etc/profile, it still has to be configured here.
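The exact edit is not shown in the text. A minimal sketch, assuming the JDK path used earlier in this manual, is to set an absolute path in hadoop-env.sh. A likely reason this is needed is that the start scripts launch the daemons over ssh in non-login shells, which may not read /etc/profile.

```shell
# In /usr/local/hadoop/hadoop-2.7.3/etc/hadoop/hadoop-env.sh
# Use an absolute path instead of relying on the value inherited from the environment.
export JAVA_HOME=/usr/local/java/jdk1.8.0_121
```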

yarn-env.sh

Since the JAVA_HOME environment variable is already set, it does not need to be set here.

slaves
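The contents of the slaves file are not reproduced in the text. Judging from the start-dfs.sh output later in this manual, which starts DataNodes on Ubuntu-02 and Ubuntu-03, the file presumably lists those two hostnames, one per line. The sketch below writes such a file; `CONF_DIR` is a hypothetical variable that defaults to a scratch directory so the example is safe to try.

```shell
# Defaults to a scratch directory. To write the real file, set
# CONF_DIR=/usr/local/hadoop/hadoop-2.7.3/etc/hadoop before running.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# One worker hostname per line (assumed from the start-dfs.sh output).
printf '%s\n' Ubuntu-02 Ubuntu-03 > "$CONF_DIR/slaves"

cat "$CONF_DIR/slaves"
```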

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://Ubuntu-01:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/hadoop-2.7.3/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>hadoop.proxyuser.spark.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.spark.groups</name>

<value>*</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>Ubuntu-01:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hadoop-2.7.3/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hadoop-2.7.3/tmp</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>Ubuntu-01:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>Ubuntu-01:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>Ubuntu-01:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>Ubuntu-01:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>Ubuntu-01:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>Ubuntu-01:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>Ubuntu-01:8088</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.160</value>

</property>

</configuration>

Copy the configured Hadoop directory to the two slave machines:

hadoop@Ubuntu-01:/usr/local/hadoop$ scp -r hadoop-2.7.3/ hadoop@Ubuntu-02:/usr/local/hadoop/

hadoop@Ubuntu-01:/usr/local/hadoop$ scp -r hadoop-2.7.3/ hadoop@Ubuntu-03:/usr/local/hadoop/

Verification

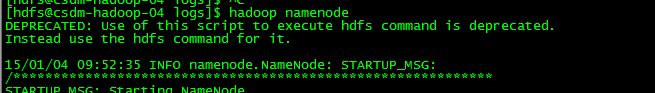

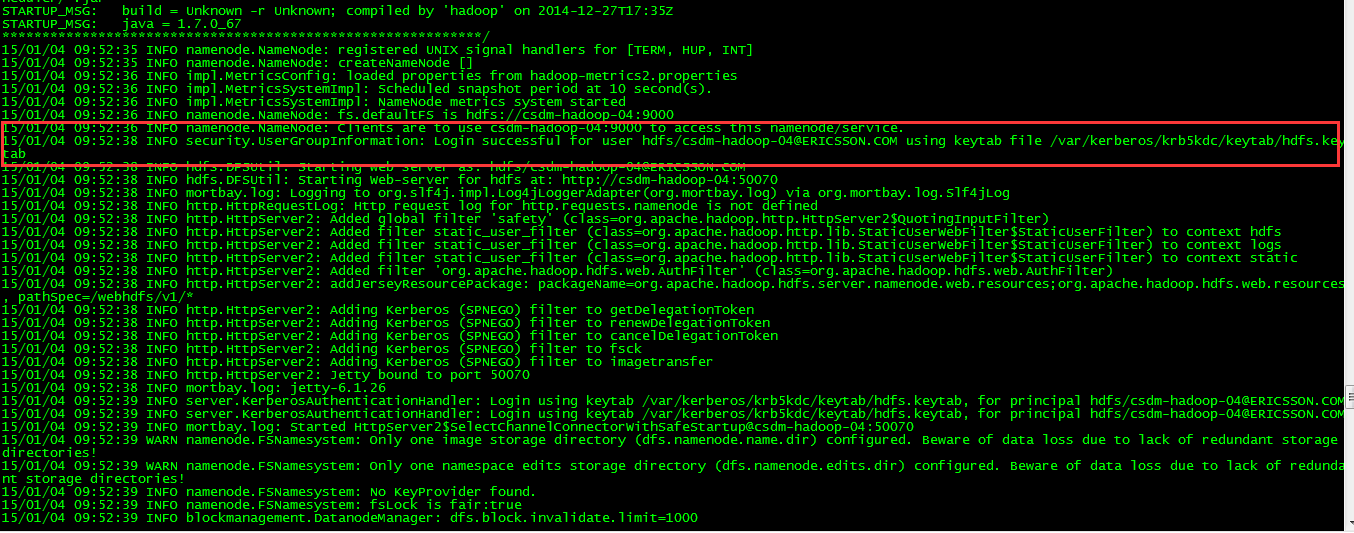

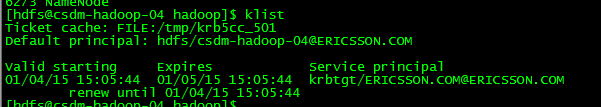

Format the NameNode

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ hdfs namenode -format

17/02/12 14:00:27 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = Ubuntu-01/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

STARTUP_MSG: classpath = /usr/local/hadoop/hadoop-2.7.3/etc/hadoop:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/hadoop-auth-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jackson-mappe
r-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/curator-framework-2.7.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jersey-json-1.9.jar:/usr
/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/curator-client-2.7.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/hadoop-annotations-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/hadoop-common-2.7.3-tests.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/hadoop-nfs-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/hadoop-common-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3
/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/hadoop-hdfs-nfs-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/hadoop-hdfs-2.7.3-tests.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/hdfs/hadoop-hdfs-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/comm
ons-logging-1.1.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop
/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-registry-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-client-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-api-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-tests-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-common-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-common-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop
/hadoop-2.7.3/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/lib/hadoop-annotations-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.3-tests.jar:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar:/usr/local/hadoop/hadoop-2.7.3/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff; compiled by 'root' on 2016-08-18T01:41Z

STARTUP_MSG: java = 1.8.0_121

************************************************************/

17/02/12 14:00:27 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

17/02/12 14:00:27 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-7e34c743-19b0-4ebb-b922-37e8657a8090

17/02/12 14:00:27 INFO namenode.FSNamesystem: No KeyProvider found.

17/02/12 14:00:27 INFO namenode.FSNamesystem: fsLock is fair:true

17/02/12 14:00:27 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

17/02/12 14:00:27 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

17/02/12 14:00:27 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

17/02/12 14:00:27 INFO blockmanagement.BlockManager: The block deletion will start around 2017 二月 12 14:00:27

17/02/12 14:00:27 INFO util.GSet: Computing capacity for map BlocksMap

17/02/12 14:00:27 INFO util.GSet: VM type = 64-bit

17/02/12 14:00:28 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

17/02/12 14:00:28 INFO util.GSet: capacity = 2^21 = 2097152 entries

17/02/12 14:00:28 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

17/02/12 14:00:28 INFO blockmanagement.BlockManager: defaultReplication = 3

17/02/12 14:00:28 INFO blockmanagement.BlockManager: maxReplication = 512

17/02/12 14:00:28 INFO blockmanagement.BlockManager: minReplication = 1

17/02/12 14:00:28 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

17/02/12 14:00:28 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

17/02/12 14:00:28 INFO blockmanagement.BlockManager: encryptDataTransfer = false

17/02/12 14:00:28 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

17/02/12 14:00:28 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

17/02/12 14:00:28 INFO namenode.FSNamesystem: supergroup = supergroup

17/02/12 14:00:28 INFO namenode.FSNamesystem: isPermissionEnabled = true

17/02/12 14:00:28 INFO namenode.FSNamesystem: HA Enabled: false

17/02/12 14:00:28 INFO namenode.FSNamesystem: Append Enabled: true

17/02/12 14:00:28 INFO util.GSet: Computing capacity for map INodeMap

17/02/12 14:00:28 INFO util.GSet: VM type = 64-bit

17/02/12 14:00:28 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

17/02/12 14:00:28 INFO util.GSet: capacity = 2^20 = 1048576 entries

17/02/12 14:00:28 INFO namenode.FSDirectory: ACLs enabled? false

17/02/12 14:00:28 INFO namenode.FSDirectory: XAttrs enabled? true

17/02/12 14:00:28 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

17/02/12 14:00:28 INFO namenode.NameNode: Caching file names occuring more than 10 times

17/02/12 14:00:28 INFO util.GSet: Computing capacity for map cachedBlocks

17/02/12 14:00:28 INFO util.GSet: VM type = 64-bit

17/02/12 14:00:28 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

17/02/12 14:00:28 INFO util.GSet: capacity = 2^18 = 262144 entries

17/02/12 14:00:28 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

17/02/12 14:00:28 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

17/02/12 14:00:28 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

17/02/12 14:00:28 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

17/02/12 14:00:28 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

17/02/12 14:00:28 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

17/02/12 14:00:28 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

17/02/12 14:00:28 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

17/02/12 14:00:28 INFO util.GSet: Computing capacity for map NameNodeRetryCache

17/02/12 14:00:28 INFO util.GSet: VM type = 64-bit

17/02/12 14:00:28 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

17/02/12 14:00:28 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory /usr/local/hadoop/hadoop-2.7.3/name ? (Y or N) Y

17/02/12 14:00:33 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1871053033-127.0.1.1-1486879233735

17/02/12 14:00:33 INFO common.Storage: Storage directory /usr/local/hadoop/hadoop-2.7.3/name has been successfully formatted.

17/02/12 14:00:33 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/hadoop-2.7.3/name/current/fsimage.ckpt_0000000000000000000 using no compression

17/02/12 14:00:33 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/hadoop-2.7.3/name/current/fsimage.ckpt_0000000000000000000 of size 353 bytes saved in 0 seconds.

17/02/12 14:00:33 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

17/02/12 14:00:33 INFO util.ExitUtil: Exiting with status 0

17/02/12 14:00:33 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at Ubuntu-01/127.0.1.1

************************************************************/

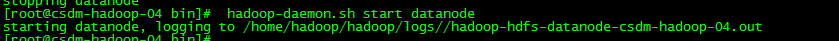

Start HDFS

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ start-dfs.sh

Starting namenodes on [Ubuntu-01]

Ubuntu-01: starting namenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-Ubuntu-01.out

Ubuntu-03: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-03.out

Ubuntu-02: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-02.out

Starting secondary namenodes [Ubuntu-01]

Ubuntu-01: starting secondarynamenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-Ubuntu-01.out

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ jps

9466 Jps

9147 NameNode

9357 SecondaryNameNode

Stop HDFS

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ stop-dfs.sh

Stopping namenodes on [Ubuntu-01]

Ubuntu-01: stopping namenode

Ubuntu-02: stopping datanode

Ubuntu-03: stopping datanode

Stopping secondary namenodes [Ubuntu-01]

Ubuntu-01: stopping secondarynamenode

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ jps

9814 Jps

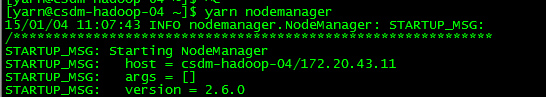

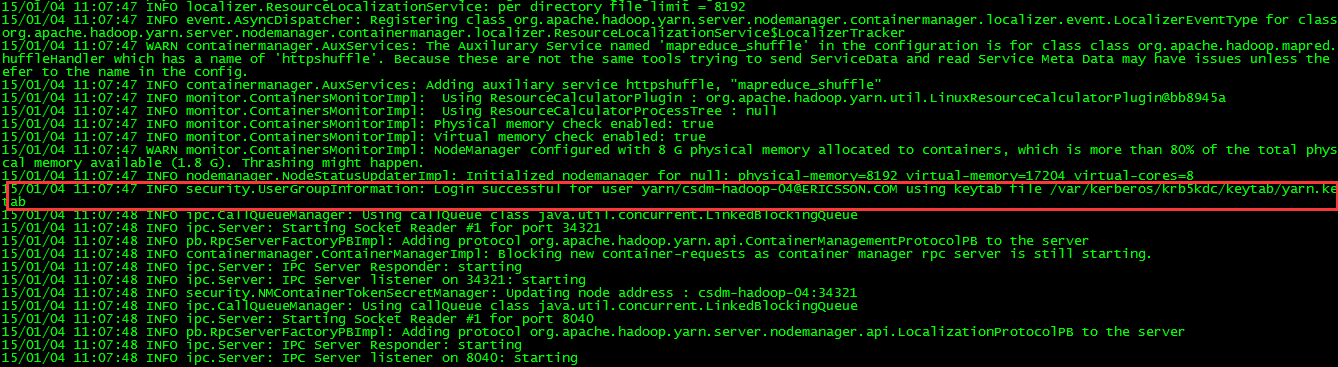

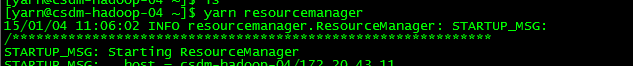

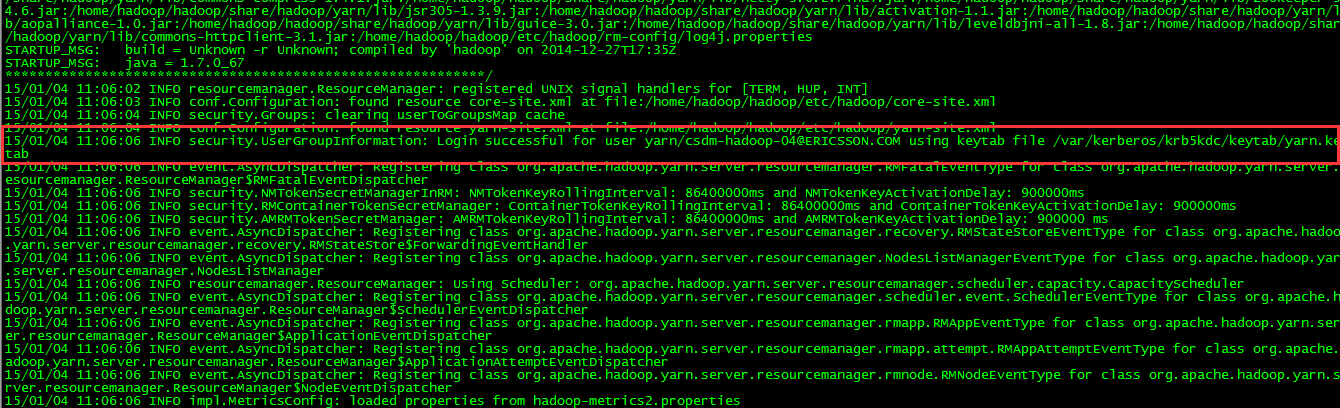

Start YARN

hadoop@Ubuntu-01:~$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-Ubuntu-01.out

Ubuntu-02: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-Ubuntu-02.out

Ubuntu-03: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-Ubuntu-03.out

hadoop@Ubuntu-01:~$ jps

6252 ResourceManager

6509 Jps

Stop YARN

hadoop@Ubuntu-01:~$ stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

Ubuntu-03: stopping nodemanager

Ubuntu-02: stopping nodemanager

Ubuntu-03: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

Ubuntu-02: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

no proxyserver to stop

Check the cluster status

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ hdfs dfsadmin -report

report: Call From Ubuntu-01/127.0.1.1 to Ubuntu-01:9000 failed on connection exception: java.net.ConnectException: 拒绝连接; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

hadoop@Ubuntu-01:/usr/local/hadoop/hadoop-2.7.3/etc/hadoop$ start-dfs.sh

Starting namenodes on [Ubuntu-01]

Ubuntu-01: starting namenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-Ubuntu-01.out

Ubuntu-03: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-03.out

Ubuntu-02: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-02.out

Starting secondary namenodes [Ubuntu-01]

Ubuntu-01: starting secondarynamenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-Ubuntu-01.out

hadoop@Ubuntu-01:~$ hdfs dfsadmin -report

Configured Capacity: 82167201792 (76.52 GB)

Present Capacity: 67444781056 (62.81 GB)

DFS Remaining: 67444297728 (62.81 GB)

DFS Used: 483328 (472 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (2):

Name: 192.168.1.180:50010 (Ubuntu-03)

Hostname: Ubuntu-03

Decommission Status : Normal

Configured Capacity: 41083600896 (38.26 GB)

DFS Used: 241664 (236 KB)

Non DFS Used: 7351656448 (6.85 GB)

DFS Remaining: 33731702784 (31.42 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.11%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Feb 12 23:18:24 CST 2017

Name: 192.168.1.170:50010 (Ubuntu-02)

Hostname: Ubuntu-02

Decommission Status : Normal

Configured Capacity: 41083600896 (38.26 GB)

DFS Used: 241664 (236 KB)

Non DFS Used: 7370764288 (6.86 GB)

DFS Remaining: 33712594944 (31.40 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.06%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Feb 12 23:18:24 CST 2017
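The report is long, so for a quick health check it can help to pull out just the number of live datanodes and the remaining space per node. The following is a minimal sketch; the function names live_datanodes and datanode_remaining are hypothetical, and the sample is an abridged copy of the report above.

```shell
# live_datanodes: print the N from the "Live datanodes (N):" line of
# `hdfs dfsadmin -report` output read from stdin.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# datanode_remaining: print "<hostname> <DFS Remaining%>" per datanode.
datanode_remaining() {
    awk '/^Hostname:/ { host = $2 }
         /^DFS Remaining%:/ && host != "" { print host, $3; host = "" }'
}

# Abridged sample copied from the report above.
report='Live datanodes (2):
Name: 192.168.1.180:50010 (Ubuntu-03)
Hostname: Ubuntu-03
DFS Remaining%: 82.11%
Name: 192.168.1.170:50010 (Ubuntu-02)
Hostname: Ubuntu-02
DFS Remaining%: 82.06%'
printf '%s\n' "$report" | live_datanodes
printf '%s\n' "$report" | datanode_remaining
```

On the cluster the same functions can be fed directly: hdfs dfsadmin -report | live_datanodes.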

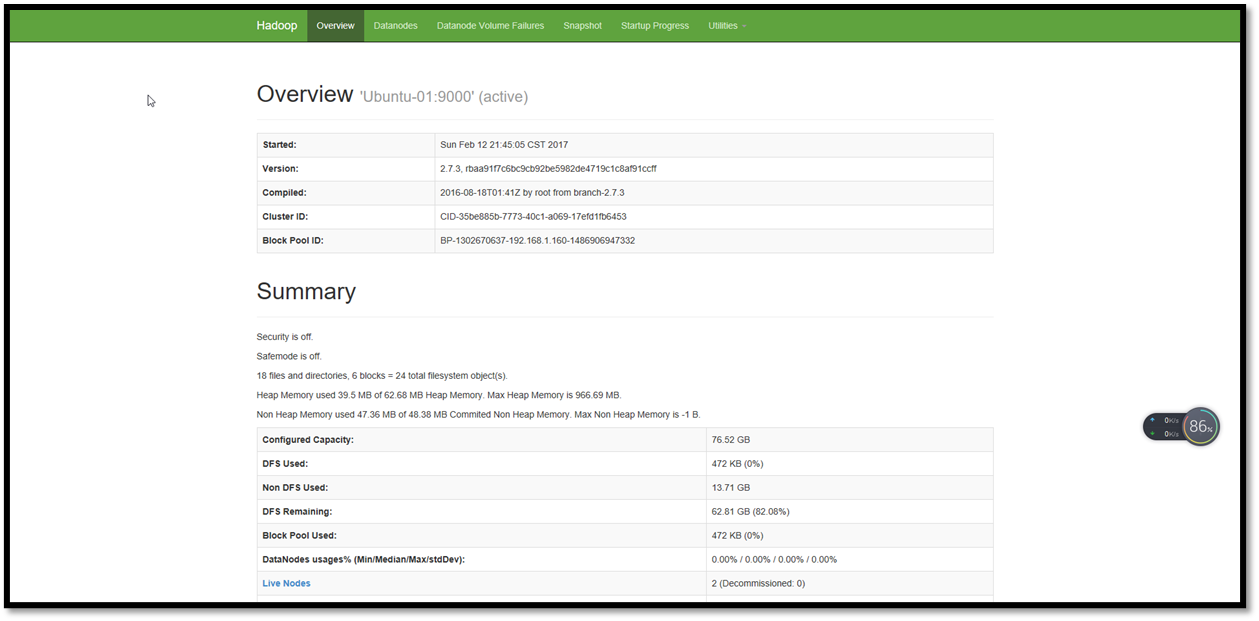

View the HDFS web UI

http://192.168.1.160:50070/

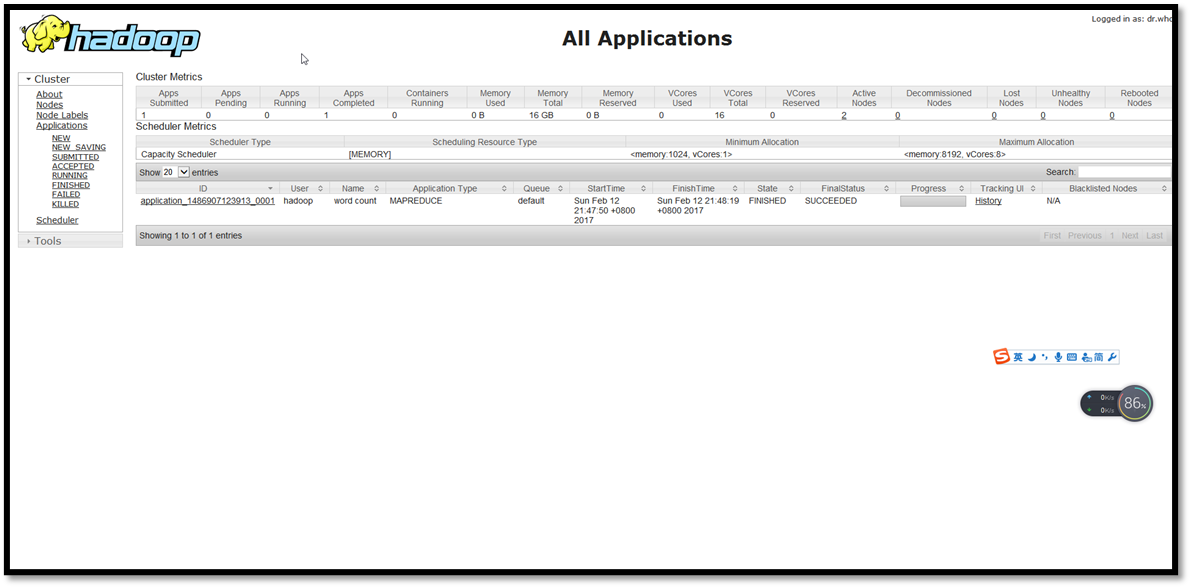

View the ResourceManager web UI

http://192.168.1.160:8088/

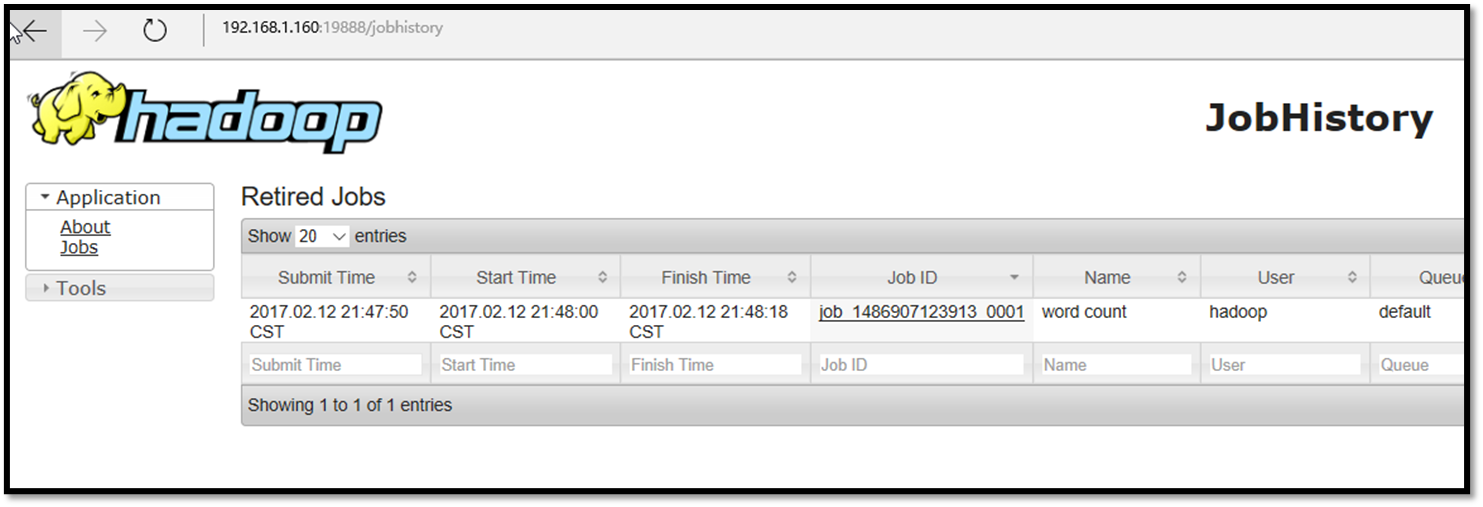

View the JobHistory server

hadoop@Ubuntu-01:~$ mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /usr/local/hadoop/hadoop-2.7.3/logs/mapred-hadoop-historyserver-Ubuntu-01.out

hadoop@Ubuntu-01:~$ mr-jobhistory-daemon.sh stop historyserver

stopping historyserver

While the history server is running, its web UI is available at: http://192.168.1.160:19888/jobhistory

Run the wordcount program

Create the input directory

hadoop@Ubuntu-01:~$ mkdir input

Create f1 and f2 in input and write some content

hadoop@Ubuntu-01:~$ cat input/f1

Hello world bye jj

hadoop@Ubuntu-01:~$ cat input/f2

Hello Hadoop bye Hadoop
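The transcript only shows the finished files, not how they were written. One way to create them, assuming the contents are exactly the two lines printed by cat above, is:

```shell
# Create the local input directory and the two sample files.
mkdir -p input
printf 'Hello world bye jj\n' > input/f1
printf 'Hello Hadoop bye Hadoop\n' > input/f2

# Show what was written.
cat input/f1 input/f2
```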

Create the /tmp/input directory in HDFS

hadoop@Ubuntu-01:~$ hadoop fs -mkdir /tmp

hadoop@Ubuntu-01:~$ hadoop fs -mkdir /tmp/input

Copy f1 and f2 to the HDFS /tmp/input directory

hadoop@Ubuntu-01:~$ hadoop fs -put input/ /tmp

Run the wordcount program

hadoop@Ubuntu-01:~$ hadoop jar /usr/local/hadoop/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /tmp/input /output

17/02/12 21:47:48 INFO client.RMProxy: Connecting to ResourceManager at Ubuntu-01/192.168.1.160:8032

17/02/12 21:47:49 INFO input.FileInputFormat: Total input paths to process : 2

17/02/12 21:47:49 INFO mapreduce.JobSubmitter: number of splits:2

17/02/12 21:47:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1486907123913_0001

17/02/12 21:47:50 INFO impl.YarnClientImpl: Submitted application application_1486907123913_0001

17/02/12 21:47:50 INFO mapreduce.Job: The url to track the job: http://Ubuntu-01:8088/proxy/application_1486907123913_0001/

17/02/12 21:47:50 INFO mapreduce.Job: Running job: job_1486907123913_0001

17/02/12 21:48:02 INFO mapreduce.Job: Job job_1486907123913_0001 running in uber mode : false

17/02/12 21:48:02 INFO mapreduce.Job: map 0% reduce 0%

17/02/12 21:48:12 INFO mapreduce.Job: map 100% reduce 0%

17/02/12 21:48:20 INFO mapreduce.Job: map 100% reduce 100%

17/02/12 21:48:21 INFO mapreduce.Job: Job job_1486907123913_0001 completed successfully

17/02/12 21:48:21 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=84

FILE: Number of bytes written=357236

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=243

HDFS: Number of bytes written=36

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=16865

Total time spent by all reduces in occupied slots (ms)=4585

Total time spent by all map tasks (ms)=16865

Total time spent by all reduce tasks (ms)=4585

Total vcore-milliseconds taken by all map tasks=16865

Total vcore-milliseconds taken by all reduce tasks=4585

Total megabyte-milliseconds taken by all map tasks=17269760

Total megabyte-milliseconds taken by all reduce tasks=4695040

Map-Reduce Framework

Map input records=2

Map output records=8

Map output bytes=75

Map output materialized bytes=90

Input split bytes=198

Combine input records=8

Combine output records=7

Reduce input groups=5

Reduce shuffle bytes=90

Reduce input records=7

Reduce output records=5

Spilled Records=14

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=426

CPU time spent (ms)=1580

Physical memory (bytes) snapshot=508022784

Virtual memory (bytes) snapshot=5689307136

Total committed heap usage (bytes)=263376896

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=45

File Output Format Counters

Bytes Written=36

View the result

hadoop@Ubuntu-01:~$ hadoop fs -cat /output/part-r-00000

Hadoop 2

Hello 2

bye 2

jj 1

world 1
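To cross-check the job output without the cluster, the same count can be reproduced locally with standard tools. This is only a sanity check on the two sample lines, not a replacement for the MapReduce job; the function name local_wordcount is hypothetical.

```shell
# local_wordcount: count whitespace-separated words read from stdin and
# print "<word> <count>" per line.
# LC_ALL=C makes sort compare bytes, so uppercase sorts before lowercase,
# which is the same order as the job output shown above.
local_wordcount() {
    tr -s ' ' '\n' |
        LC_ALL=C sort |
        uniq -c |
        awk 'NF == 2 { print $2, $1 }'
}

# The two input lines from f1 and f2.
printf 'Hello world bye jj\nHello Hadoop bye Hadoop\n' | local_wordcount
```

If the local counts and the contents of /output/part-r-00000 disagree, the input uploaded to HDFS is probably not what you expect.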

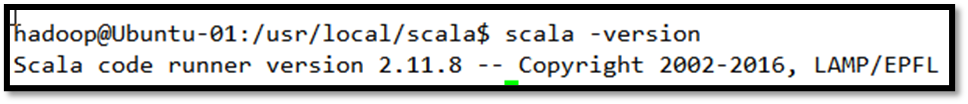

Install Scala

Download scala-2.11.8.tgz from http://www.scala-lang.org/download/

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir scala

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop scala

hadoop@Ubuntu-01:/usr/local$ cd scala/

hadoop@Ubuntu-01:/usr/local/scala$ tar -zxvf scala-2.11.8.tgz

hadoop@Ubuntu-01:/usr/local/scala$ sudo vim /etc/profile

Append at the end of the file:

export SCALA_HOME=/usr/local/scala/scala-2.11.8

export PATH=$PATH:$SCALA_HOME/bin

hadoop@Ubuntu-01:/usr/local/scala$ source /etc/profile

Install Scala on the other two machines in the same way.
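Since the same export lines have to be added to /etc/profile on three machines (and again for every later component), it is easy to end up with duplicates. A small idempotent helper can avoid that. The sketch below uses a hypothetical function append_once and demonstrates it on a scratch file; against /etc/profile it would have to run as root.

```shell
# append_once FILE LINE: append LINE to FILE unless an identical line
# is already there, so re-running the setup does not duplicate exports.
# Hypothetical helper; for /etc/profile it has to run as root.
append_once() {
    touch "$1"
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Demonstration against a scratch file instead of /etc/profile.
profile=$(mktemp)
append_once "$profile" 'export SCALA_HOME=/usr/local/scala/scala-2.11.8'
append_once "$profile" 'export PATH=$PATH:$SCALA_HOME/bin'
append_once "$profile" 'export SCALA_HOME=/usr/local/scala/scala-2.11.8'  # no-op
cat "$profile"
```

The single quotes matter: they keep $PATH and $SCALA_HOME unexpanded so that the literal text lands in the file.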

Install Spark

Download spark-2.1.0-bin-hadoop2.7.tgz from http://spark.apache.org/

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir spark

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop spark

hadoop@Ubuntu-01:/usr/local$ cd spark/

hadoop@Ubuntu-01:/usr/local/spark$ tar -zxvf spark-2.1.0-bin-hadoop2.7.tgz

hadoop@Ubuntu-01:/usr/local/spark$ sudo vim /etc/profile

export SPARK_HOME=/usr/local/spark/spark-2.1.0-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

hadoop@Ubuntu-01:/usr/local/spark$ source /etc/profile

Repeat these steps on the other two machines.

Configuration

cd /usr/local/spark/spark-2.1.0-bin-hadoop2.7/conf

mv spark-env.sh.template spark-env.sh

vim spark-env.sh

HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

SPARK_MASTER_HOST=192.168.1.160

SPARK_MASTER_WEBUI_PORT=28686

SPARK_LOCAL_DIRS=/usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/local

SPARK_WORKER_DIR=/usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/worker

SPARK_DRIVER_MEMORY=4G

SPARK_WORKER_CORES=16

SPARK_WORKER_MEMORY=64g

SPARK_LOG_DIR=/usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/logs

# The settings below are mainly for the history server; they are optional

SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=3 -Dspark.history.fs.logDirectory=hdfs://Ubuntu-01:9000/spark/log"

mv spark-defaults.conf.template spark-defaults.conf

vim spark-defaults.conf

# The settings below are mainly for the history server; they are optional

spark.eventLog.enabled true

spark.eventLog.dir hdfs://Ubuntu-01:9000/spark/log

spark.eventLog.compress true
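As far as I know, Spark does not create the event log directory by itself, so the HDFS path named in spark.eventLog.dir and spark.history.fs.logDirectory should exist before the first application or the history server is started. A minimal sketch follows; the helper hdfs_path is hypothetical, and the hadoop command is only invoked when it is found on PATH.

```shell
# hdfs_path URI: strip the scheme and authority from an HDFS URI,
# e.g. hdfs://Ubuntu-01:9000/spark/log -> /spark/log.
hdfs_path() {
    printf '%s\n' "$1" | sed 's|^[a-z][a-z0-9]*://[^/]*||'
}

event_log_uri='hdfs://Ubuntu-01:9000/spark/log'
event_log_path=$(hdfs_path "$event_log_uri")
echo "event log directory: $event_log_path"

# Create the directory only when the hadoop client is available
# (HDFS must already be running for this to succeed).
if command -v hadoop >/dev/null 2>&1; then
    hadoop fs -mkdir -p "$event_log_path"
else
    echo "hadoop not found on PATH; run 'hadoop fs -mkdir -p $event_log_path' on the cluster"
fi
```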

Copy the Spark directory to the other nodes

hadoop@Ubuntu-01:/usr/local/spark$ scp -r spark-2.1.0-bin-hadoop2.7/ hadoop@Ubuntu-02:/usr/local/spark

hadoop@Ubuntu-01:/usr/local/spark$ scp -r spark-2.1.0-bin-hadoop2.7/ hadoop@Ubuntu-03:/usr/local/spark

Verification

hadoop@Ubuntu-01:~$ start-dfs.sh

Starting namenodes on [Ubuntu-01]

Ubuntu-01: starting namenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-Ubuntu-01.out

Ubuntu-02: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-02.out

Ubuntu-03: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-03.out

Starting secondary namenodes [Ubuntu-01]

Ubuntu-01: starting secondarynamenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-Ubuntu-01.out

hadoop@Ubuntu-01:~$ jps

5057 SecondaryNameNode

4846 NameNode

5166 Jps

hadoop@Ubuntu-01:~$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-Ubuntu-01.out

Ubuntu-02: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-Ubuntu-02.out

Ubuntu-03: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-Ubuntu-03.out

hadoop@Ubuntu-01:~$ jps

5568 Jps

5057 SecondaryNameNode

5223 ResourceManager

4846 NameNode

hadoop@Ubuntu-01:~$ start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-Ubuntu-01.out

hadoop@Ubuntu-01:~$ jps

5649 Jps

5057 SecondaryNameNode

5223 ResourceManager

5597 Master

4846 NameNode

hadoop@Ubuntu-01:~$ start-slave.sh 192.168.1.160:7077

starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-Ubuntu-01.out

hadoop@Ubuntu-01:~$ jps

5057 SecondaryNameNode

5223 ResourceManager

5672 Worker

5720 Jps

5597 Master

4846 NameNode

hadoop@Ubuntu-01:~$ start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /usr/local/spark/spark-2.1.0-bin-hadoop2.7/tmp/logs/spark-hadoop-org.apache.spark.deploy.history.HistoryServer-1-Ubuntu-01.out

hadoop@Ubuntu-01:~$ run-example org.apache.spark.examples.SparkPi 2>&1 | grep "Pi is roughly"

Pi is roughly 3.140195700978505

hadoop@Ubuntu-01:~$ spark-submit $SPARK_HOME/examples/src/main/python/pi.py 2>&1 | grep "Pi is roughly"

Pi is roughly 3.146920

hadoop@Ubuntu-01:~$ spark-shell

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

17/02/15 22:30:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/02/15 22:30:29 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

17/02/15 22:30:29 WARN metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

17/02/15 22:30:32 WARN metastore.ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

Spark context Web UI available at http://192.168.1.160:4040

Spark context available as 'sc' (master = local[*], app id = local-1487169020439).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.1.0

/_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_121)

Type in expressions to have them evaluated.

Type :help for more information.

scala> val textFile =sc.textFile("file:///usr/local/spark/spark-2.1.0-bin-hadoop2.7/README.md");

textFile: org.apache.spark.rdd.RDD[String] = file:///usr/local/spark/spark-2.1.0-bin-hadoop2.7/README.md MapPartitionsRDD[1] at textFile at <console>:24

scala> textFile.count();

res8: Long = 104

scala> textFile.first();

res9: String = # Apache Spark

scala> val linesWithSpark = textFile.filter(line=> line.contains("Spark"));

linesWithSpark: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[2] at filter at <console>:26

scala> linesWithSpark.count();

res10: Long = 20

scala> textFile.filter(line =>line.contains("Spark")).count();

res11: Long = 20

scala>

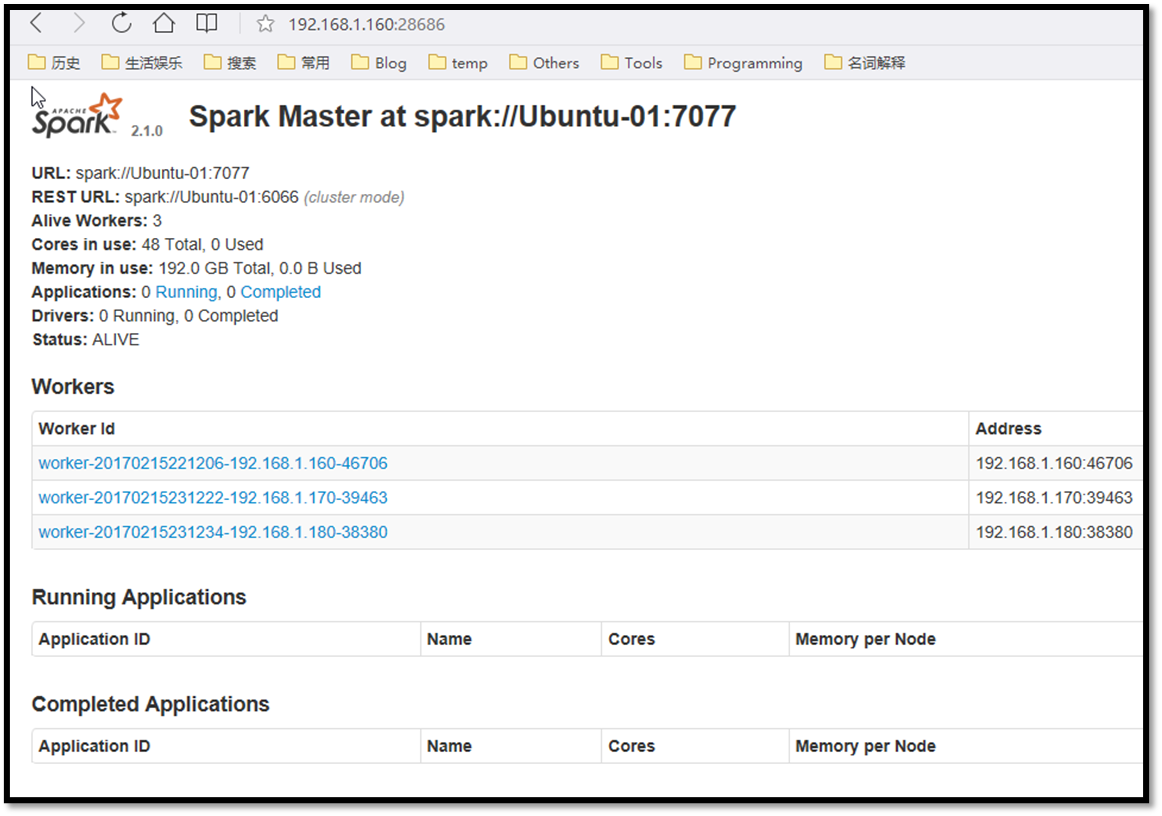

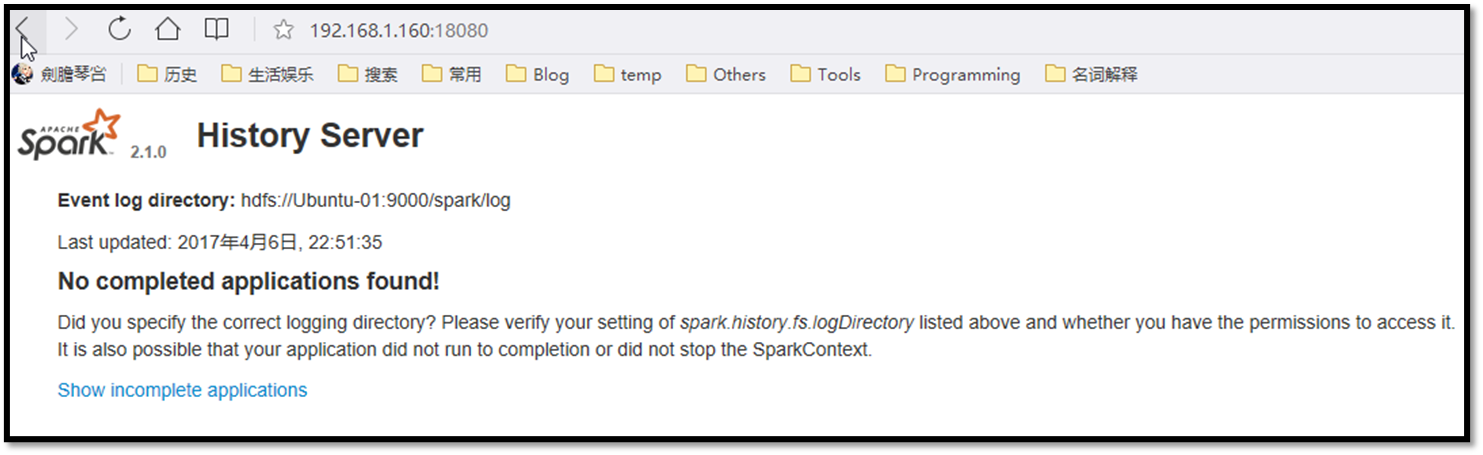

Web UIs

SPARK_MASTER_WEBUI: http://192.168.1.160:28686/

SPARK_HISTORY:http://192.168.1.160:18080/

Shutdown order

hadoop@Ubuntu-01:~$ stop-history-server.sh

stopping org.apache.spark.deploy.history.HistoryServer

hadoop@Ubuntu-01:~$ stop-slaves.sh

Ubuntu-02: stopping org.apache.spark.deploy.worker.Worker

Ubuntu-03: stopping org.apache.spark.deploy.worker.Worker

Ubuntu-01: stopping org.apache.spark.deploy.worker.Worker

hadoop@Ubuntu-01:~$ stop-master.sh

stopping org.apache.spark.deploy.master.Master

hadoop@Ubuntu-01:~$ stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

Ubuntu-03: stopping nodemanager

Ubuntu-02: stopping nodemanager

no proxyserver to stop

hadoop@Ubuntu-01:~$ stop-dfs.sh

Stopping namenodes on [Ubuntu-01]

Ubuntu-01: stopping namenode

Ubuntu-02: stopping datanode

Ubuntu-03: stopping datanode

Stopping secondary namenodes [Ubuntu-01]

Ubuntu-01: stopping secondarynamenode
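The shutdown sequence above is the reverse of the start-up sequence, and it can be captured in one small script so the order is not forgotten. The sketch below only prints the commands by default; the function name stop_all and the RUN switch are my own conventions, not part of Spark or Hadoop.

```shell
# stop_all: stop the services in the order used above
# (history server, workers, master, YARN, HDFS).
# By default it only prints the commands; set RUN=1 to execute them.
stop_all() {
    for cmd in stop-history-server.sh stop-slaves.sh stop-master.sh \
               stop-yarn.sh stop-dfs.sh; do
        if [ "${RUN:-0}" = "1" ]; then
            "$cmd" || echo "warning: $cmd failed" >&2
        else
            echo "$cmd"
        fi
    done
}

stop_all
```

On Ubuntu-01, with the Hadoop and Spark sbin directories on PATH as configured earlier, RUN=1 stop_all would execute the sequence.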

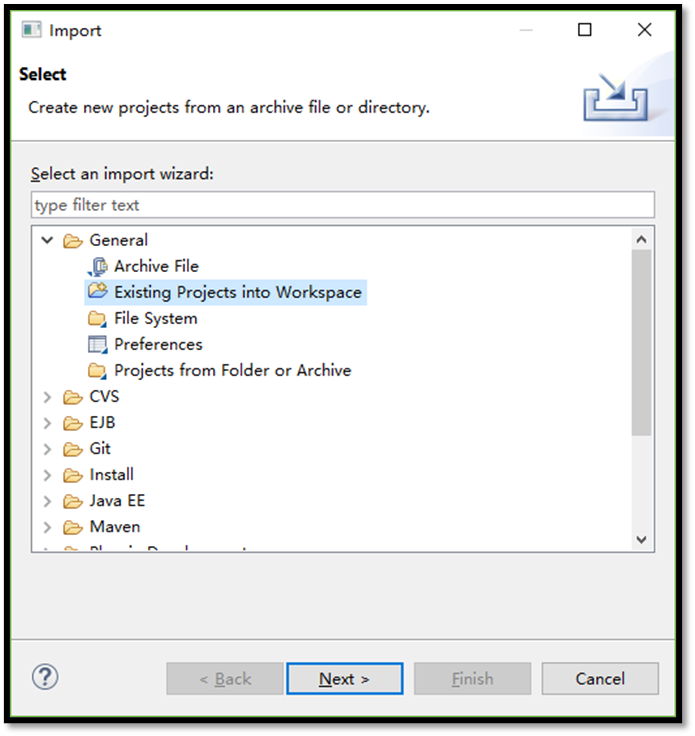

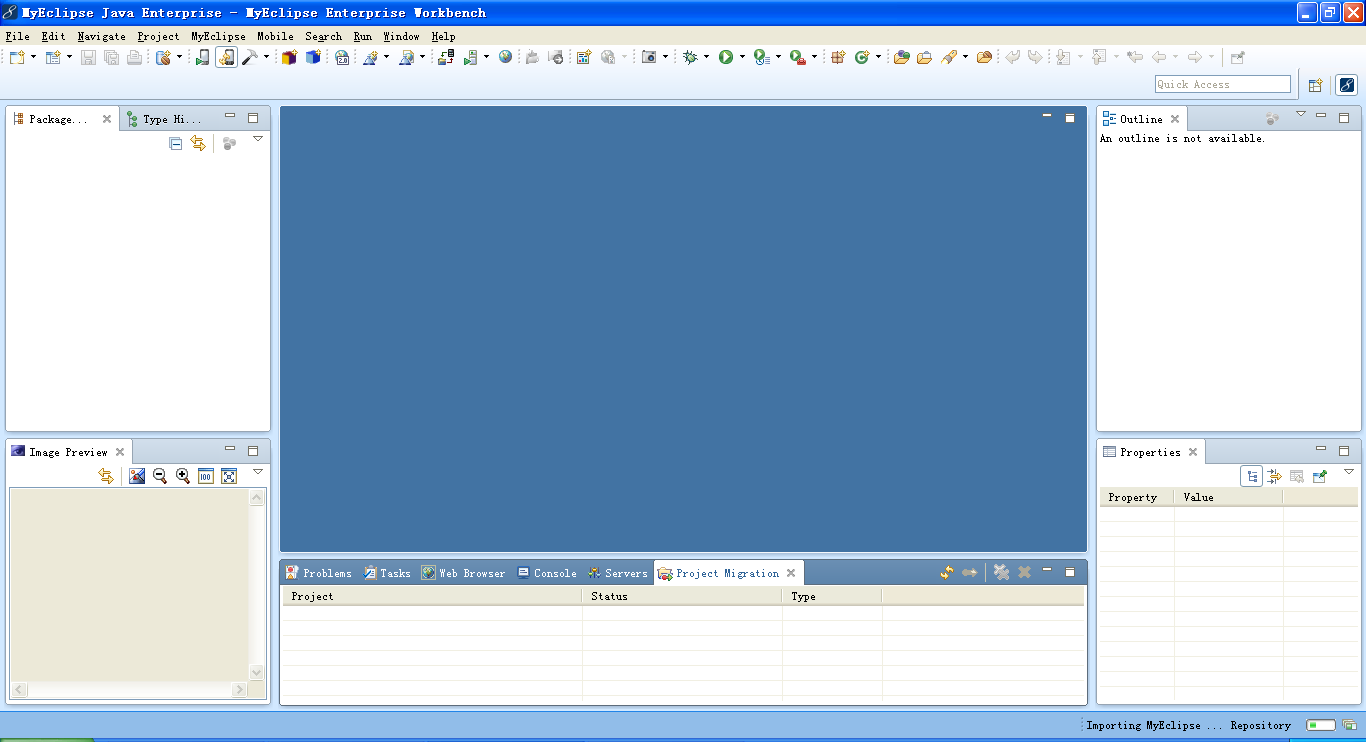

Set up a Spark source-code analysis environment with Scala-IDE

Download and extract Scala-IDE from http://scala-ide.org/index.html (scala-SDK-4.5.0-vfinal-2.11-win32.win32.x86_64.zip).

Download and install SBT from http://www.scala-sbt.org/ (sbt-0.13.13.1.msi).

Download the latest Spark source from GitHub:

PS D:\temp\Scala> git clone https://github.com/apache/spark.git

PS D:\temp\Scala> cd .\spark\

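Run the sbt command. The first run downloads many dependencies and is slow; some of them are hosted outside the firewall, so set up a VPN beforehand. If it fails, run it again a few times.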

PS D:\temp\Scala\spark> sbt

ignoring option MaxPermSize=256m; support was removed in 8.0

[info] Loading project definition from D:\temp\Scala\spark\project

[info] Resolving key references (18753 settings) ...

[info] Set current project to spark-parent (in build file:/D:/temp/Scala/spark/)

At the sbt prompt, enter eclipse. The first run also downloads many dependencies and is slow. If it fails, run it again a few times.

> eclipse

[info] About to create Eclipse project files for your project(s).

[info] Resolving jline#jline;2.12.1 ...

[info] Successfully created Eclipse project files for project(s):

[info] spark-sql

[info] spark-sql-kafka-0-10

[info] spark-streaming-kafka-0-8-assembly

[info] spark-examples

[info] spark-streaming

[info] spark-mllib

[info] spark-catalyst

[info] spark-streaming-kafka-0-10-assembly

[info] spark-graphx

[info] spark-streaming-flume-sink

[info] spark-tags

[info] spark-assembly

[info] spark-mllib-local

[info] spark-tools

[info] spark-repl

[info] spark-streaming-flume-assembly

[info] spark-streaming-kafka-0-8

[info] old-deps

[info] spark-network-common

[info] spark-hive

[info] spark-streaming-flume

[info] spark-sketch

[info] spark-network-shuffle

[info] spark-core

[info] spark-unsafe

[info] spark-streaming-kafka-0-10

[info] spark-launcher

> exit

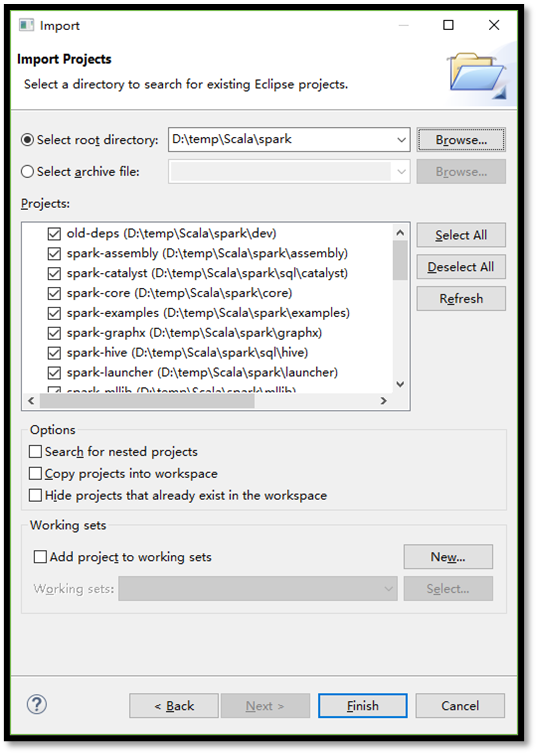

Import the Spark projects into Scala-IDE:

Install ZooKeeper

Download zookeeper-3.4.9.tar.gz from https://zookeeper.apache.org/

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir zookeeper

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop zookeeper/

hadoop@Ubuntu-01:/usr/local$ cd zookeeper/

hadoop@Ubuntu-01:/usr/local/zookeeper$ tar -zxvf zookeeper-3.4.9.tar.gz

hadoop@Ubuntu-01:/usr/local/zookeeper$ sudo vim /etc/profile

export ZOOKEEPER_HOME=/usr/local/zookeeper/zookeeper-3.4.9

export PATH=$PATH:$ZOOKEEPER_HOME/bin

hadoop@Ubuntu-01:/usr/local/zookeeper$ source /etc/profile

hadoop@Ubuntu-01:/usr/local/zookeeper$ cd zookeeper-3.4.9/conf

hadoop@Ubuntu-01:/usr/local/zookeeper/zookeeper-3.4.9/conf$ cp zoo_sample.cfg zoo.cfg

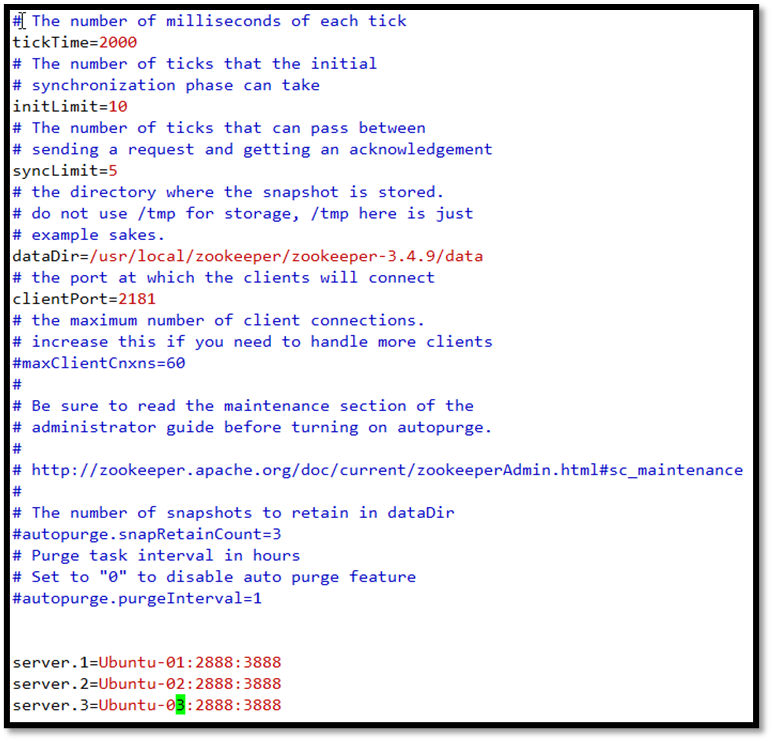

hadoop@Ubuntu-01:/usr/local/zookeeper/zookeeper-3.4.9/conf$ vim zoo.cfg

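Change dataDir to dataDir=/usr/local/zookeeper/zookeeper-3.4.9/data (this directory has to be created), and add all hosts at the end of the file. Note that the number after server must match the number in that host's myid file.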

hadoop@Ubuntu-01:/usr/local/zookeeper/zookeeper-3.4.9$ mkdir data

hadoop@Ubuntu-01:/usr/local/zookeeper/zookeeper-3.4.9$ cd data/

hadoop@Ubuntu-01:/usr/local/zookeeper/zookeeper-3.4.9/data$ vim myid

Write 1 into myid.

Do the same on Ubuntu-02 and Ubuntu-03, writing each host's own number into its myid file.

hadoop@Ubuntu-01:/usr/local/zookeeper$ scp -r zookeeper-3.4.9/ hadoop@Ubuntu-02:/usr/local/zookeeper/

hadoop@Ubuntu-01:/usr/local/zookeeper$ scp -r zookeeper-3.4.9/ hadoop@Ubuntu-03:/usr/local/zookeeper/
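The relationship between the server entries in zoo.cfg and the myid files can be sketched as follows. The host order and the function names are my own choices, and the ports 2888 and 3888 are the ones used in the ZooKeeper documentation examples; they are an assumption here, because the actual lines of this setup are only visible in the screenshot.

```shell
# Hosts in ensemble order; the position in the list is the server id.
hosts='Ubuntu-01 Ubuntu-02 Ubuntu-03'

# zk_server_lines: print one "server.N=host:2888:3888" line per host.
# 2888 (quorum) and 3888 (leader election) are assumed ports taken from
# the ZooKeeper documentation examples.
zk_server_lines() {
    id=1
    for host in $hosts; do
        echo "server.$id=$host:2888:3888"
        id=$((id + 1))
    done
}

# zk_myid HOST: print the id that belongs in HOST's data/myid file.
zk_myid() {
    id=1
    for host in $hosts; do
        if [ "$host" = "$1" ]; then
            echo "$id"
            return 0
        fi
        id=$((id + 1))
    done
    return 1
}

zk_server_lines
echo "myid for Ubuntu-02: $(zk_myid Ubuntu-02)"
```

Because the data directory lives inside zookeeper-3.4.9, the scp commands above also copy the myid file from Ubuntu-01. It therefore has to be overwritten on each target host afterwards, for example with echo 2 > /usr/local/zookeeper/zookeeper-3.4.9/data/myid on Ubuntu-02.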

Verification

Run on every ZooKeeper machine:

hadoop@Ubuntu-01:~$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

hadoop@Ubuntu-02:~$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

hadoop@Ubuntu-03:~$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

hadoop@Ubuntu-01:~$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

hadoop@Ubuntu-02:~$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: leader

hadoop@Ubuntu-03:~$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

hadoop@Ubuntu-01:~$ zkCli.sh -server Ubuntu-01:2181

Connecting to Ubuntu-01:2181

2017-02-18 22:32:23,226 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.9-1757313, built on 08/23/2016 06:50 GMT

2017-02-18 22:32:23,231 [myid:] - INFO [main:Environment@100] - Client environment:host.name=Ubuntu-01

2017-02-18 22:32:23,231 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_121

2017-02-18 22:32:23,233 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2017-02-18 22:32:23,233 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/java/jdk1.8.0_121/jre

2017-02-18 22:32:23,233 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/zookeeper-3.4.9/bin/../build/classes:/usr/local/zookeeper/zookeeper-3.4.9/bin/../build/lib/*.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../lib/netty-3.10.5.Final.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../lib/log4j-1.2.16.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../zookeeper-3.4.9.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../src/java/lib/*.jar:/usr/local/zookeeper/zookeeper-3.4.9/bin/../conf:.:/usr/local/java/jdk1.8.0_121/lib:.:/usr/local/java/jdk1.8.0_121/lib:.:/usr/local/java/jdk1.8.0_121/lib:

2017-02-18 22:32:23,234 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2017-02-18 22:32:23,234 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2017-02-18 22:32:23,234 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2017-02-18 22:32:23,235 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2017-02-18 22:32:23,235 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2017-02-18 22:32:23,235 [myid:] - INFO [main:Environment@100] - Client environment:os.version=4.4.0-62-generic

2017-02-18 22:32:23,235 [myid:] - INFO [main:Environment@100] - Client environment:user.name=hadoop

2017-02-18 22:32:23,236 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/home/hadoop

2017-02-18 22:32:23,236 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/home/hadoop

2017-02-18 22:32:23,237 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=Ubuntu-01:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@531d72ca

Welcome to ZooKeeper!

2017-02-18 22:32:23,268 [myid:] - INFO [main-SendThread(Ubuntu-01:2181):ClientCnxn$SendThread@1032] - Opening socket connection to server Ubuntu-01/192.168.1.160:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2017-02-18 22:32:23,398 [myid:] - INFO [main-SendThread(Ubuntu-01:2181):ClientCnxn$SendThread@876] - Socket connection established to Ubuntu-01/192.168.1.160:2181, initiating session

2017-02-18 22:32:23,429 [myid:] - INFO [main-SendThread(Ubuntu-01:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server Ubuntu-01/192.168.1.160:2181, sessionid = 0x15a51a0e47d0000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: Ubuntu-01:2181(CONNECTED) 0] quit

Quitting...

2017-02-18 22:32:34,993 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@519] - EventThread shut down for session: 0x15a51a0e47d0000

2017-02-18 22:32:34,994 [myid:] - INFO [main:ZooKeeper@684] - Session: 0x15a51a0e47d0000 closed

hadoop@Ubuntu-01:~$ echo ruok| nc Ubuntu-01 2181

imokhadoop@Ubuntu-01:~$

Install HBase

Download from http://hbase.apache.org/; the version installed here is hbase-1.2.4-bin.tar.gz.

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir hbase

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop hbase/

hadoop@Ubuntu-01:/usr/local$ cd hbase/

hadoop@Ubuntu-01:/usr/local/hbase$ tar -zxvf hbase-1.2.4-bin.tar.gz

hadoop@Ubuntu-01:/usr/local/hbase$ sudo vim /etc/profile

export HBASE_HOME=/usr/local/hbase/hbase-1.2.4

export PATH=$PATH:$HBASE_HOME/bin

export HBASE_CLASSPATH=$HBASE_HOME/lib

hadoop@Ubuntu-01:/usr/local/hbase$ source /etc/profile

hadoop@Ubuntu-01:/usr/local/hbase$ cd hbase-1.2.4/conf

hadoop@Ubuntu-01:/usr/local/hbase/hbase-1.2.4/conf$ vim hbase-env.sh

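Uncomment the JAVA_HOME and HBASE_MANAGES_ZK entries and set them to the correct values. HBASE_MANAGES_ZK is set to false because HBase uses the ZooKeeper ensemble installed above rather than managing its own.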

export JAVA_HOME=/usr/local/java/jdk1.8.0_121

export HBASE_MANAGES_ZK=false

hadoop@Ubuntu-01:/usr/local/hbase/hbase-1.2.4/conf$ vim hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://Ubuntu-01:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>Ubuntu-01,Ubuntu-02,Ubuntu-03</value>

</property>

</configuration>

Copy to the other two nodes

hadoop@Ubuntu-01:/usr/local/hbase$ scp -r hbase-1.2.4 hadoop@Ubuntu-02:/usr/local/hbase/

hadoop@Ubuntu-01:/usr/local/hbase$ scp -r hbase-1.2.4 hadoop@Ubuntu-03:/usr/local/hbase/

Verification

Startup

zkServer.sh start has to be run on every node; all other start commands only need to be run on the master node.

hadoop@Ubuntu-01:~$ start-dfs.sh

Starting namenodes on [Ubuntu-01]

Ubuntu-01: starting namenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-Ubuntu-01.out

Ubuntu-02: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-02.out

Ubuntu-03: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-03.out

Starting secondary namenodes [Ubuntu-01]

Ubuntu-01: starting secondarynamenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-Ubuntu-01.out

hadoop@Ubuntu-01:~$ jps

10835 Jps

10518 NameNode

4299 SecondaryNameNode

hadoop@Ubuntu-01:~$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

hadoop@Ubuntu-01:~$ jps

10518 NameNode

10854 QuorumPeerMain

4299 SecondaryNameNode

10879 Jps

hadoop@Ubuntu-01:~$ start-hbase.sh

starting master, logging to /usr/local/hbase/hbase-1.2.4/logs/hbase-hadoop-master-Ubuntu-01.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

hadoop@Ubuntu-01:~$ jps

10518 NameNode

10854 QuorumPeerMain

11015 HMaster

11191 Jps

4299 SecondaryNameNode

hadoop@Ubuntu-01:~$ hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hbase/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2016

hbase(main):001:0>

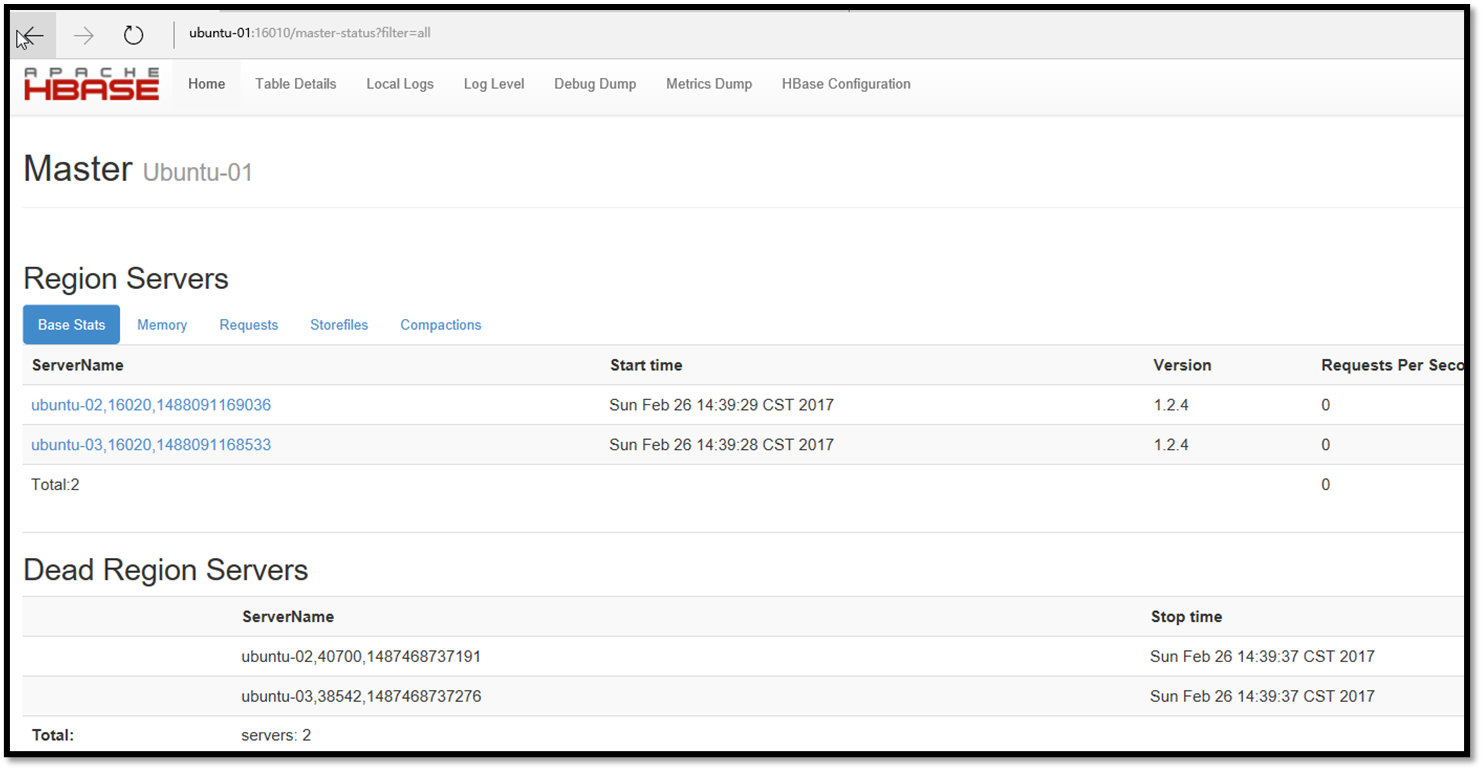
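The start-up order used above (HDFS, then ZooKeeper on every node, then HBase) can be written down as a small plan. The sketch below only prints the commands. It assumes passwordless SSH for the hadoop user, which the Hadoop start scripts already rely on, and that java can be found in a non-interactive SSH session; the function and variable names are hypothetical.

```shell
# Full path, because a non-interactive SSH session may not read
# /etc/profile and so may not have zkServer.sh on PATH.
ZK_BIN=/usr/local/zookeeper/zookeeper-3.4.9/bin/zkServer.sh
ZK_HOSTS='Ubuntu-01 Ubuntu-02 Ubuntu-03'

# hbase_start_plan: print the start-up commands in dependency order:
# HDFS first, then ZooKeeper on every node, then HBase.
hbase_start_plan() {
    echo "start-dfs.sh"
    for host in $ZK_HOSTS; do
        # -n keeps ssh from reading stdin if the plan is piped to a shell.
        echo "ssh -n hadoop@$host $ZK_BIN start"
    done
    echo "start-hbase.sh"
}

hbase_start_plan
```

Shutdown follows the same plan in reverse: stop-hbase.sh, then zkServer.sh stop on every node, then stop-dfs.sh.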

View the HBase management UI

http://ubuntu-01:16010/

Shutdown

zkServer.sh stop has to be run on every node; all other stop commands only need to be run on the master node.

hadoop@Ubuntu-01:~$ jps

11297 Jps

10518 NameNode

10854 QuorumPeerMain

11015 HMaster

4299 SecondaryNameNode

hadoop@Ubuntu-01:~$ stop-hbase.sh

stopping hbase................

hadoop@Ubuntu-01:~$ jps

10518 NameNode

10854 QuorumPeerMain

4299 SecondaryNameNode

11516 Jps

hadoop@Ubuntu-01:~$ zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.9/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

hadoop@Ubuntu-01:~$ jps

11537 Jps

10518 NameNode

4299 SecondaryNameNode

hadoop@Ubuntu-01:~$ stop-dfs.sh

Stopping namenodes on [Ubuntu-01]

Ubuntu-01: stopping namenode

Ubuntu-02: stopping datanode

Ubuntu-03: stopping datanode

Stopping secondary namenodes [Ubuntu-01]

hadoop@Ubuntu-01:~$ jps

11880 Jps

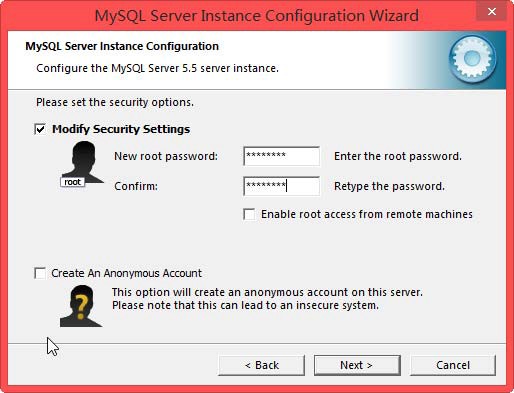

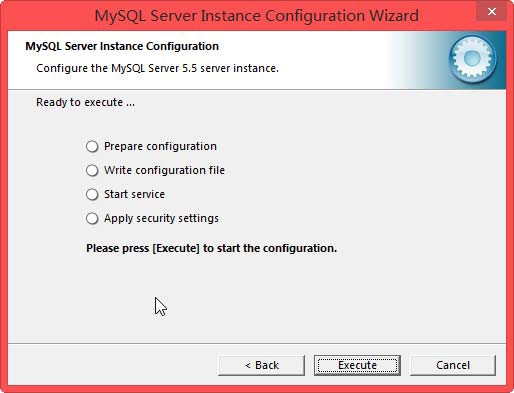

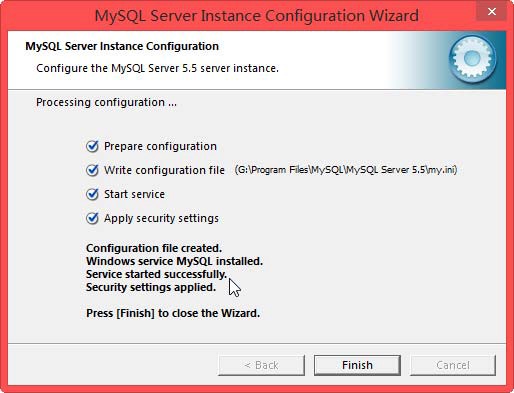

Install Hive

Download apache-hive-2.1.1-bin.tar.gz from http://hive.apache.org/

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir hive

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop hive/

hadoop@Ubuntu-01:/usr/local$ cd hive/

hadoop@Ubuntu-01:/usr/local/hive$ tar -zxvf apache-hive-2.1.1-bin.tar.gz

hadoop@Ubuntu-01:/usr/local/hive$ sudo vim /etc/profile

export HIVE_HOME=/usr/local/hive/apache-hive-2.1.1-bin

export PATH=$PATH:$HIVE_HOME/bin

hadoop@Ubuntu-01:/usr/local/hive$ source /etc/profile

hadoop@Ubuntu-01:/usr/local/hive$ cd apache-hive-2.1.1-bin/conf/

hadoop@Ubuntu-01:/usr/local/hive/apache-hive-2.1.1-bin/conf$ cp hive-default.xml.template hive-site.xml

hadoop@Ubuntu-01:/usr/local/hive/apache-hive-2.1.1-bin/conf$ vim hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.1.100:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

<property>

<name>hive.hwi.listen.port</name>

<value>9999</value>

<description>This is the port the Hive Web Interface will listen on</description>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>false</value>

</property>

<property>

<name>datanucleus.fixedDatastore</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

<description>controls whether to connect to remote metastore server or open a new metastore server in Hive Client JVM</description>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

</configuration>

Download the MySQL JDBC driver (mysql-connector-java-5.1.38.jar) and copy it into the lib directory under the Hive home.

hadoop@Ubuntu-01:~$ schematool -initSchema -dbType mysql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://192.168.1.100:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hive

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.mysql.sql

Initialization script completed

schemaTool completed

hadoop@Ubuntu-01:~$ start-dfs.sh

Starting namenodes on [Ubuntu-01]

Ubuntu-01: starting namenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-Ubuntu-01.out

Ubuntu-02: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-02.out

Ubuntu-03: starting datanode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-Ubuntu-03.out

Starting secondary namenodes [Ubuntu-01]

Ubuntu-01: starting secondarynamenode, logging to /usr/local/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-Ubuntu-01.out

hadoop@Ubuntu-01:~$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/hive/apache-hive-2.1.1-bin/lib/hive-common-2.1.1.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

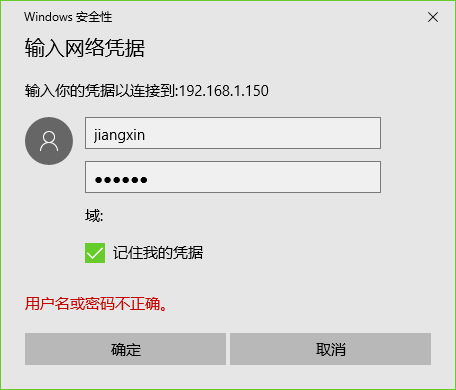

hive>

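The following statements are run in MySQL on 192.168.1.100 (not in the Hive CLI), before running schematool, to create the metastore database and the hive user:

create database hive;

-- Create the hive user and grant privileges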

grant all on hive.* to hive@'%' identified by 'hive';

flush privileges;

Install Pig

Download pig-0.16.0.tar.gz from http://pig.apache.org/

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir pig

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop pig/

hadoop@Ubuntu-01:/usr/local$ cd pig/

hadoop@Ubuntu-01:/usr/local/pig$ tar -zxvf pig-0.16.0.tar.gz

hadoop@Ubuntu-01:/usr/local/pig$ sudo vim /etc/profile

export PIG_HOME=/usr/local/pig/pig-0.16.0

export PATH=$PATH:$PIG_HOME/bin

export PIG_CLASSPATH=$HADOOP_HOME/etc/hadoop

hadoop@Ubuntu-01:/usr/local/pig$ source /etc/profile
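By this point /etc/profile carries a home variable for every component, and a misspelled variable name expands to an empty string without any error. A quick check that each variable is set and points at a real directory can catch that early. The function name check_homes is hypothetical, and the variable list simply mirrors the exports used in this manual.

```shell
# check_homes NAME...: for each variable name, report whether it is set
# and points at an existing directory. Returns non-zero on any problem.
check_homes() {
    status=0
    for name in "$@"; do
        # Indirect lookup of the variable whose name is in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name is not set"
            status=1
        elif [ ! -d "$value" ]; then
            echo "$name=$value is not a directory"
            status=1
        else
            echo "$name=$value ok"
        fi
    done
    return $status
}

check_homes HADOOP_HOME SCALA_HOME SPARK_HOME ZOOKEEPER_HOME \
            HBASE_HOME HIVE_HOME PIG_HOME || true
```

Run it in a fresh login shell (or after source /etc/profile) on each of the three machines.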

Test

hadoop@Ubuntu-01:~$ pig -x local

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/02/24 22:26:57 INFO pig.ExecTypeProvider: Trying ExecType : LOCAL

17/02/24 22:26:57 INFO pig.ExecTypeProvider: Picked LOCAL as the ExecType

2017-02-24 22:26:57,715 [main] INFO org.apache.pig.Main - Apache Pig version 0.16.0 (r1746530) compiled Jun 01 2016, 23:10:49

2017-02-24 22:26:57,715 [main] INFO org.apache.pig.Main - Logging error messages to: /home/hadoop/pig_1487946417711.log

2017-02-24 22:26:57,754 [main] INFO org.apache.pig.impl.util.Utils - Default bootup file /home/hadoop/.pigbootup not found

2017-02-24 22:26:58,045 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2017-02-24 22:26:58,046 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - fs.default.name is deprecated. Instead, use fs.defaultFS

2017-02-24 22:26:58,048 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: file:///

2017-02-24 22:26:58,344 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2017-02-24 22:26:58,374 [main] INFO org.apache.pig.PigServer - Pig Script ID for the session: PIG-default-7efc5f81-68bd-4e67-aa0d-30882be88c11

2017-02-24 22:26:58,375 [main] WARN org.apache.pig.PigServer - ATS is disabled since yarn.timeline-service.enabled set to false

grunt> quit

2017-02-24 22:30:50,757 [main] INFO org.apache.pig.Main - Pig script completed in 3 minutes, 53 seconds and 947 milliseconds (233947 ms)

hadoop@Ubuntu-01:~$ pig

17/02/24 22:32:52 INFO pig.ExecTypeProvider: Trying ExecType : LOCAL

17/02/24 22:32:52 INFO pig.ExecTypeProvider: Trying ExecType : MAPREDUCE

17/02/24 22:32:52 INFO pig.ExecTypeProvider: Picked MAPREDUCE as the ExecType

2017-02-24 22:32:52,844 [main] INFO org.apache.pig.Main - Apache Pig version 0.16.0 (r1746530) compiled Jun 01 2016, 23:10:49

2017-02-24 22:32:52,845 [main] INFO org.apache.pig.Main - Logging error messages to: /home/hadoop/pig_1487946772836.log

2017-02-24 22:32:52,890 [main] INFO org.apache.pig.impl.util.Utils - Default bootup file /home/hadoop/.pigbootup not found

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2017-02-24 22:32:53,865 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2017-02-24 22:32:53,866 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - fs.default.name is deprecated. Instead, use fs.defaultFS

2017-02-24 22:32:53,866 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: hdfs://Ubuntu-01:9000

2017-02-24 22:32:54,752 [main] INFO org.apache.pig.PigServer - Pig Script ID for the session: PIG-default-aeeb7102-d88a-4154-9876-d76ff0da3a8c

2017-02-24 22:32:54,752 [main] WARN org.apache.pig.PigServer - ATS is disabled since yarn.timeline-service.enabled set to false

grunt> ls /

hdfs://Ubuntu-01:9000/hbase <dir>

hdfs://Ubuntu-01:9000/output <dir>

hdfs://Ubuntu-01:9000/tmp <dir>
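Both sessions above only open the Grunt shell. A slightly stronger smoke test is to run a two-line script in local mode. The sketch below assumes scratch files in a temporary directory; the file names and the relation name `lines` are made up for illustration, and the run is skipped when `pig` is not on the PATH:

```shell
workdir=$(mktemp -d)

# Two lines of input for the script to read back.
printf 'hello\npig\n' > "$workdir/input.txt"

# Pig Latin: load each input line as a single chararray field, then print the relation.
cat > "$workdir/smoke.pig" <<EOF
lines = LOAD '$workdir/input.txt' AS (line:chararray);
DUMP lines;
EOF

if command -v pig >/dev/null 2>&1; then
    # Local mode reads from the local file system, so nothing has to be uploaded to HDFS.
    pig -x local "$workdir/smoke.pig"
else
    echo "pig not found on PATH; script left at $workdir/smoke.pig"
fi
```

Local mode is used here so that the check does not depend on HDFS or YARN being up.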

Install Mahout

Download apache-mahout-distribution-0.12.2.tar.gz from http://mahout.apache.org/.

hadoop@Ubuntu-01:~$ cd /usr/local

hadoop@Ubuntu-01:/usr/local$ sudo mkdir mahout

hadoop@Ubuntu-01:/usr/local$ sudo chown hadoop:hadoop mahout/

hadoop@Ubuntu-01:/usr/local$ cd mahout/

hadoop@Ubuntu-01:/usr/local/mahout$ tar -zxvf apache-mahout-distribution-0.12.2.tar.gz

hadoop@Ubuntu-01:/usr/local/mahout$ sudo vim /etc/profile

export MAHOUT_HOME=/usr/local/mahout/apache-mahout-distribution-0.12.2

export PATH=$PATH:$MAHOUT_HOME/bin

hadoop@Ubuntu-01:/usr/local/mahout$ source /etc/profile

hadoop@Ubuntu-01:~$ cd

hadoop@Ubuntu-01:~$ start-dfs.sh

hadoop@Ubuntu-01:~$ start-yarn.sh

hadoop@Ubuntu-01:~$ mahout --help

Running on hadoop, using /usr/local/hadoop/hadoop-2.7.3/bin/hadoop and HADOOP_CONF_DIR=

MAHOUT-JOB: /usr/local/mahout/apache-mahout-distribution-0.12.2/mahout-examples-0.12.2-job.jar

Unknown program '--help' chosen.

Valid program names are:

arff.vector: : Generate Vectors from an ARFF file or directory

baumwelch: : Baum-Welch algorithm for unsupervised HMM training

canopy: : Canopy clustering

cat: : Print a file or resource as the logistic regression models would see it

cleansvd: : Cleanup and verification of SVD output

clusterdump: : Dump cluster output to text

clusterpp: : Groups Clustering Output In Clusters

cmdump: : Dump confusion matrix in HTML or text formats

cvb: : LDA via Collapsed Variation Bayes (0th deriv. approx)

cvb0_local: : LDA via Collapsed Variation Bayes, in memory locally.

describe: : Describe the fields and target variable in a data set

evaluateFactorization: : compute RMSE and MAE of a rating matrix factorization against probes

fkmeans: : Fuzzy K-means clustering

hmmpredict: : Generate random sequence of observations by given HMM

itemsimilarity: : Compute the item-item-similarities for item-based collaborative filtering

kmeans: : K-means clustering

lucene.vector: : Generate Vectors from a Lucene index

matrixdump: : Dump matrix in CSV format

matrixmult: : Take the product of two matrices

parallelALS: : ALS-WR factorization of a rating matrix

qualcluster: : Runs clustering experiments and summarizes results in a CSV

recommendfactorized: : Compute recommendations using the factorization of a rating matrix

recommenditembased: : Compute recommendations using item-based collaborative filtering

regexconverter: : Convert text files on a per line basis based on regular expressions

resplit: : Splits a set of SequenceFiles into a number of equal splits

rowid: : Map SequenceFile<Text,VectorWritable> to {SequenceFile<IntWritable,VectorWritable>, SequenceFile<IntWritable,Text>}

rowsimilarity: : Compute the pairwise similarities of the rows of a matrix

runAdaptiveLogistic: : Score new production data using a probably trained and validated AdaptivelogisticRegression model

runlogistic: : Run a logistic regression model against CSV data

seq2encoded: : Encoded Sparse Vector generation from Text sequence files

seq2sparse: : Sparse Vector generation from Text sequence files

seqdirectory: : Generate sequence files (of Text) from a directory

seqdumper: : Generic Sequence File dumper

seqmailarchives: : Creates SequenceFile from a directory containing gzipped mail archives

seqwiki: : Wikipedia xml dump to sequence file

spectralkmeans: : Spectral k-means clustering

split: : Split Input data into test and train sets

splitDataset: : split a rating dataset into training and probe parts

ssvd: : Stochastic SVD

streamingkmeans: : Streaming k-means clustering

svd: : Lanczos Singular Value Decomposition

testnb: : Test the Vector-based Bayes classifier

trainAdaptiveLogistic: : Train an AdaptivelogisticRegression model

trainlogistic: : Train a logistic regression using stochastic gradient descent

trainnb: : Train the Vector-based Bayes classifier

transpose: : Take the transpose of a matrix

validateAdaptiveLogistic: : Validate an AdaptivelogisticRegression model against hold-out data set

vecdist: : Compute the distances between a set of Vectors (or Cluster or Canopy, they must fit in memory) and a list of Vectors

vectordump: : Dump vectors from a sequence file to text

viterbi: : Viterbi decoding of hidden states from given output states sequence

hadoop@Ubuntu-01:~$ hadoop fs -mkdir /user

hadoop@Ubuntu-01:~$ hadoop fs -mkdir /user/hadoop

hadoop@Ubuntu-01:~$ hadoop fs -mkdir /user/hadoop/testdata

hadoop@Ubuntu-01:~$ hadoop fs -put big-data-demo/synthetic_control.data.txt /user/hadoop/testdata

hadoop@Ubuntu-01:~$ hadoop jar /usr/local/mahout/apache-mahout-distribution-0.12.2/mahout-examples-0.12.2-job.jar org.apache.mahout.clustering.syntheticcontrol.kmeans.Job

hadoop@Ubuntu-01:~$ hadoop fs -ls -R output

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:21 output/_policy

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:21 output/clusteredPoints

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:21 output/clusteredPoints/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 363282 2017-03-01 22:21 output/clusteredPoints/part-m-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:16 output/clusters-0

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:16 output/clusters-0/_policy

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00000

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00001

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00002

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00003

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00004

-rw-r--r-- 2 hadoop supergroup 1891 2017-03-01 22:16 output/clusters-0/part-00005

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:16 output/clusters-1

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:16 output/clusters-1/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:16 output/clusters-1/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:16 output/clusters-1/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:21 output/clusters-10-final

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:21 output/clusters-10-final/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:21 output/clusters-10-final/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:21 output/clusters-10-final/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:17 output/clusters-2

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:17 output/clusters-2/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:17 output/clusters-2/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:17 output/clusters-2/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:17 output/clusters-3

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:17 output/clusters-3/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:17 output/clusters-3/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:17 output/clusters-3/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:18 output/clusters-4

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:18 output/clusters-4/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:18 output/clusters-4/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:18 output/clusters-4/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:18 output/clusters-5

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:18 output/clusters-5/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:18 output/clusters-5/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:18 output/clusters-5/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:19 output/clusters-6

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:19 output/clusters-6/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:19 output/clusters-6/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:19 output/clusters-6/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:19 output/clusters-7

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:19 output/clusters-7/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:19 output/clusters-7/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:19 output/clusters-7/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:20 output/clusters-8

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:20 output/clusters-8/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:20 output/clusters-8/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:20 output/clusters-8/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:20 output/clusters-9

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:20 output/clusters-9/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 194 2017-03-01 22:20 output/clusters-9/_policy

-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:20 output/clusters-9/part-r-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:16 output/data

-rw-r--r-- 2 hadoop supergroup 0 2017-03-01 22:16 output/data/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 335470 2017-03-01 22:16 output/data/part-m-00000

drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:16 output/random-seeds

-rw-r--r-- 2 hadoop supergroup 7723 2017-03-01 22:16 output/random-seeds/part-randomSeed
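As the listing shows, the k-means job writes one `clusters-N` directory per iteration and marks the last one with a `-final` suffix. A small sketch for picking that directory out of a recursive listing; the function name `final_clusters_dir` is hypothetical, and the sample text is copied from the output above:

```shell
# final_clusters_dir: read `hadoop fs -ls -R` output on stdin and
# print the directory whose name ends in -final.
final_clusters_dir() {
    # Directory entries have permissions starting with 'd'; the path is the last field.
    awk '$1 ~ /^d/ && $NF ~ /clusters-[0-9]+-final$/ { print $NF }'
}

# Sample input taken from the listing above.
# On the cluster, pipe `hadoop fs -ls -R output` into the function instead.
sample='drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:16 output/clusters-1
drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:21 output/clusters-10-final
-rw-r--r-- 2 hadoop supergroup 7581 2017-03-01 22:21 output/clusters-10-final/part-r-00000
drwxr-xr-x - hadoop supergroup 0 2017-03-01 22:17 output/clusters-2'

final_dir=$(printf '%s\n' "$sample" | final_clusters_dir)
echo "$final_dir"
```

The resulting path is the natural input for the `clusterdump` program that appears in the Mahout program list.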

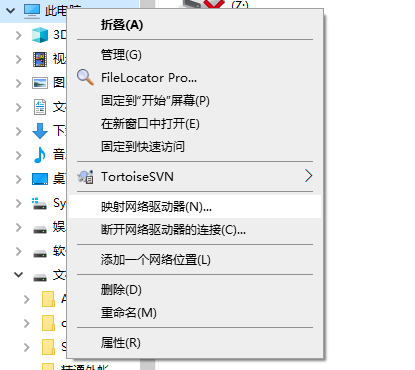

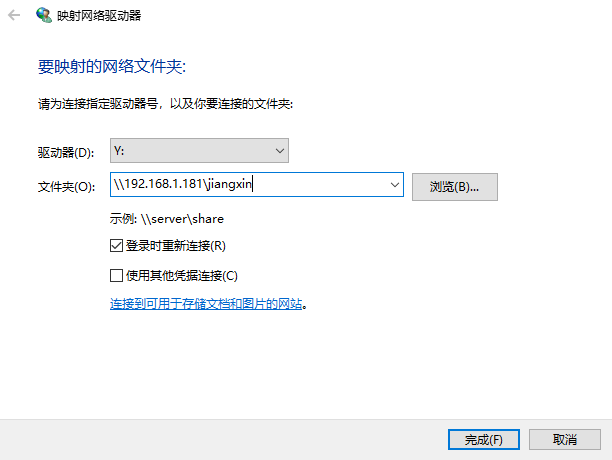

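Installing a Hadoop Cluster with Ambari

The three virtual machines ubuntu-16.04.1-04, ubuntu-16.04.1-05, and ubuntu-16.04.1-06 have been removed. To try Ambari again, the virtual machines must be recreated.

Basic Environment Setup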

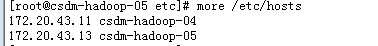

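Configure the host names, install the JDK, and set up mutual SSH login as described earlier in this manual. Mutual SSH login must be configured as the root user. If scp refuses remote access as root during this step, fix it as follows: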

root@Ubuntu-04:~# vi /etc/ssh/sshd_config

# Authentication:

LoginGraceTime 120

PermitRootLogin yes

#PermitRootLogin prohibit-password

StrictModes yes

Restart the ssh service:

root@Ubuntu-04:~# service ssh restart
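Because the edited file contains both an active and a commented-out `PermitRootLogin` line, it is easy to misread which one applies. A minimal sketch that prints the first uncommented value from a config file; the function name `root_login_setting` is hypothetical, and it runs against a scratch copy of the lines shown above rather than the real /etc/ssh/sshd_config:

```shell
# Scratch file holding the same Authentication lines as shown above.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
# Authentication:
LoginGraceTime 120
PermitRootLogin yes
#PermitRootLogin prohibit-password
StrictModes yes
EOF

# root_login_setting FILE: print the value of the first uncommented PermitRootLogin line.
# sshd keywords are case-insensitive and the first value found wins, so stop at the first match.
root_login_setting() {
    awk 'tolower($1) == "permitrootlogin" { print $2; exit }' "$1"
}

root_login_setting "$cfg"
```

For the sample above this prints `yes`. On a live host, pass /etc/ssh/sshd_config instead of the scratch file.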

In addition, installing the latest version of Ambari requires Maven, so install Maven on one of the nodes as follows:

root@Ubuntu-04:~# mkdir /usr/local/maven

root@Ubuntu-04:~# cd /usr/local/maven/

root@Ubuntu-04:/usr/local/maven# ls

apache-maven-3.5.0-bin.tar.gz

root@Ubuntu-04:/usr/local/maven# tar -zxvf apache-maven-3.5.0-bin.tar.gz

root@Ubuntu-04:/usr/local/maven# sudo vim /etc/profile

export M2_HOME=/usr/local/maven/apache-maven-3.5.0

export PATH=$PATH:$M2_HOME/bin

root@Ubuntu-04:/usr/local/maven# source /etc/profile

Install Ambari

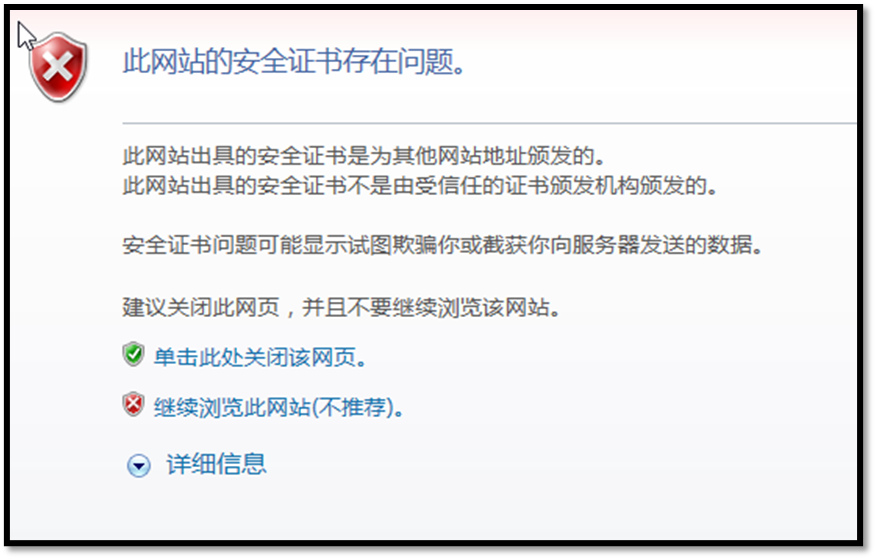

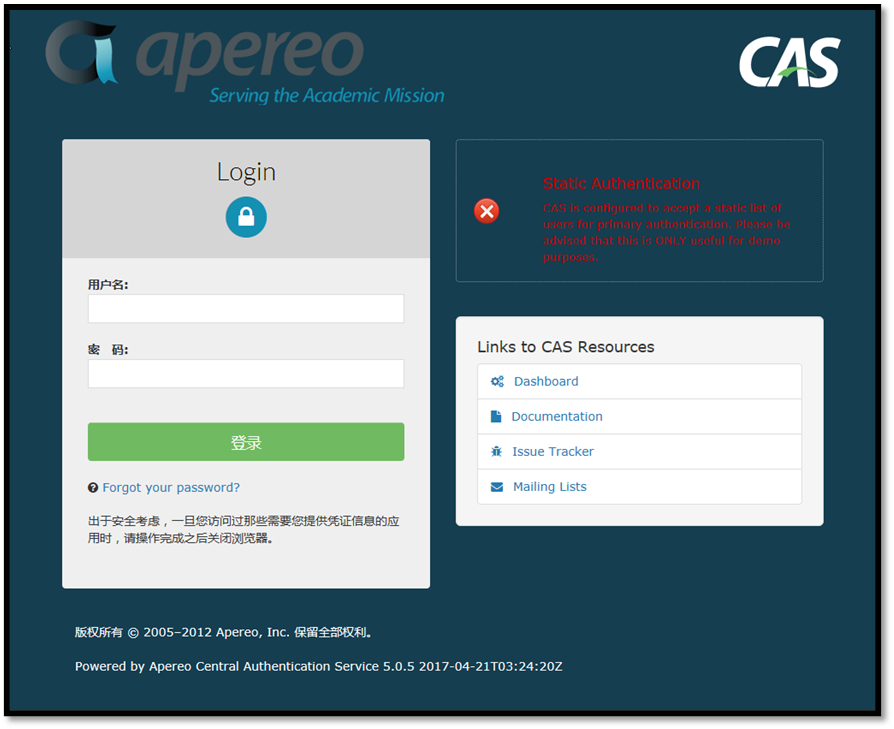

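This mainly follows the official documentation: https://cwiki.apache.org/confluence/display/AMBARI/Installation+Guide+for+Ambari+2.5.1

On one node (the one where Maven is installed), download the Ambari source package, build it into deb packages, and copy them to all the other nodes for installation.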

root@Ubuntu-04:~# mkdir /usr/local/ambari

root@Ubuntu-04:~# cd /usr/local/ambari/